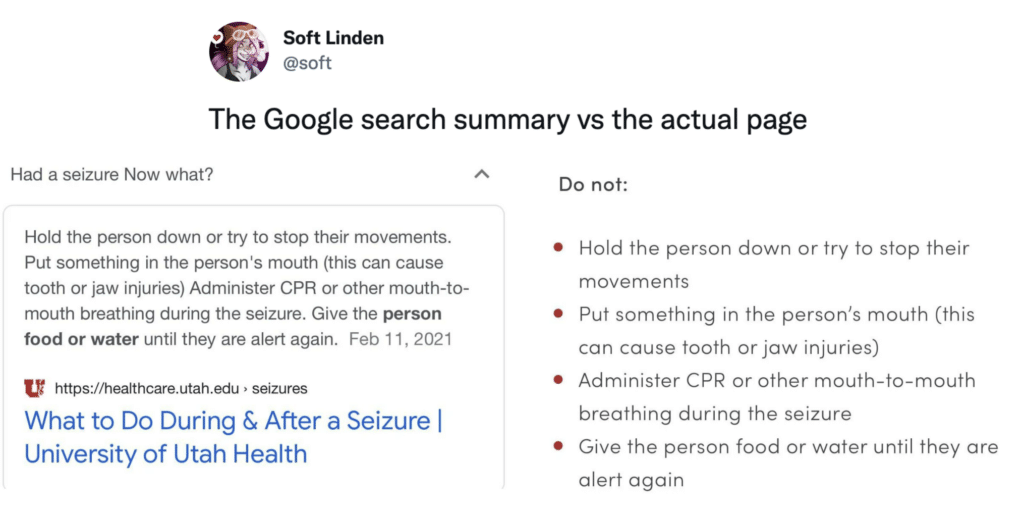

A recent tweet from Soft Linden illustrated why organizations deploying public-facing machine learning applications need strong responsible AI, governance, and testing frameworks.

Following a search for “had a seizure now what”, the tweet showed that Google’s “featured snippet” highlighted actions that a University of Utah healthcare site explicitly advised readers NOT to take.

Of course, unhelpful featured snippets are not exactly rare, but this case was particularly egregious because anyone acting on the harmful advice could cause injury.

Although accuracy is often the primary concern during model development, a lot of work remains, both in development and in production, to ensure that models are used safely and responsibly.

Basic Responsible AI Steps Every Organization Should Take

There are some basic steps all organizations should take to lay the foundation for the responsible use of AI. First and foremost, organizations need to define what responsible AI looks like for them and the users they serve. Once that definition is in place, companies need to commit to responsible AI creation and maintenance according to that definition. This will require an investment of time and resources to build knowledge, teams, and processes in support of this commitment. Ultimately, each organization must identify an approach to responsible AI that aligns with its business, leadership, products, culture, and many other factors.

In the case of featured snippets at Google, an unwillingness to slow the pace of innovation to ensure responsible use may be at issue. After all, as Deb Raji pointed out in a follow-up tweet, BERT, the language model Google uses to generate featured snippets, has known issues with identifying negation. This suggests that failures like the one seen in this case were foreseeable.
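
A foreseeable failure mode is one that a pre-release test can target. The sketch below is a hypothetical illustration only, not a description of how Google builds or tests featured snippets. It assumes each candidate snippet sentence is stored together with the heading it appeared under on the source page, and it flags sentences that sit under a negating heading (such as "Do not:") but carry no negation of their own. The names `NEGATION_CUES`, `Sentence`, and `flag_negated_context`, as well as the sample sentences, are invented for this example.

```python
import re
from dataclasses import dataclass

# Hypothetical cue list (an assumption for this sketch); a production
# system would need a far more robust negation detector than a regex.
NEGATION_CUES = re.compile(
    r"\b(do not|don't|never|avoid|should not|shouldn't)\b", re.IGNORECASE
)


@dataclass
class Sentence:
    heading: str  # heading the sentence appeared under on the source page
    text: str     # sentence text as it would appear in the snippet


def flag_negated_context(sentences):
    """Return sentences whose heading negates them but whose own text does not.

    Shown without their heading, such sentences would read as advice
    rather than as warnings, so they are sent for human review.
    """
    flagged = []
    for s in sentences:
        heading_negates = bool(NEGATION_CUES.search(s.heading))
        text_negates = bool(NEGATION_CUES.search(s.text))
        if heading_negates and not text_negates:
            flagged.append(s)
    return flagged


# Illustrative sentences written for this example, not quoted from any real page.
candidate = [
    Sentence("Do not:", "Hold the person down."),
    Sentence("Do not:", "Put something in the person's mouth."),
    Sentence("What to do", "Stay with the person until the seizure ends."),
]

for s in flag_negated_context(candidate):
    print(f"Needs review: '{s.text}' was listed under '{s.heading}'")
```

A check this simple would miss many phrasings, but it shows how a known weakness can be turned into an explicit test case before a feature reaches users.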

Enter ML Model Monitoring and Governance Tools

Some of the work to mitigate the risks presented by AI will ultimately be performed by tools. Specialist tools like Arthur AI, Credo AI, Fiddler Labs, Parity, TruEra, and WhyLabs are available from young companies in the ML model monitoring and governance space. IBM Watson OpenScale is an example of a tool from a more established IT vendor, and the major cloud AI suites, such as Amazon SageMaker, Google Vertex AI, and Microsoft Azure Machine Learning, all offer model monitoring capabilities as well.

We expect to see more such tools on offer over time, and we will continue to cover this important segment in the Solutions Guide.