Last week Facebook convened their inaugural PyTorch Developer Conference. Highlights of the conference included the release of PyTorch 1.0 beta and a host of ecosystem vendors announcing their support for the framework.

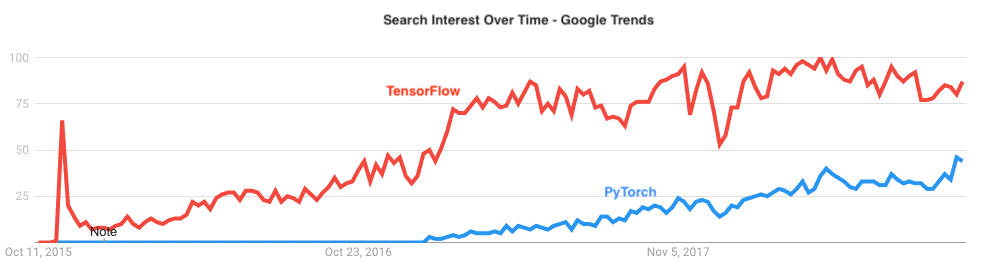

Early last year TensorFlow was the presumptive winner of the deep learning framework wars. Since then, PyTorch has grown dramatically in developer mindshare.

PyTorch’s popularity is driven in large part by a gentler learning curve compared to TensorFlow. In particular, PyTorch adopted a more dynamic and “pythonic” approach to building neural networks, which has made it easier and more intuitive for Python’s large community of developers and data scientists. TensorFlow, on the other hand, while most often used with Python, was originally written in C++ and adopts conventions that can feel unnatural to Python developers.

The two communities are jockeying for position via their respective project roadmaps. PyTorch 1.0, released in beta at the conference, adds much stronger support for running models in production, previously an area of considerable weakness relative to TensorFlow. TensorFlow 2.0, meanwhile, will prioritize “eager execution,” a mode of operation that’s, well, more like PyTorch.
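To make the distinction concrete, here is a toy sketch in plain Python. It does not use either framework's API: the `Deferred` class, the `placeholder` helper, and the `eager` function are hypothetical names invented for this illustration. The first half mimics the build-a-graph-then-run-it style, the second half mimics the eager style in which every line produces a real value right away.

```python
# Toy illustration only: nothing here is part of TensorFlow or PyTorch.

class Deferred:
    """Graph-style node: records an operation now, computes it later."""

    def __init__(self, fn, *parents):
        self.fn = fn
        self.parents = parents

    def __add__(self, other):
        return Deferred(lambda a, b: a + b, self, other)

    def __mul__(self, other):
        return Deferred(lambda a, b: a * b, self, other)

    def run(self, feed):
        """Evaluate the recorded graph, given values for the placeholders."""
        if not self.parents:  # a placeholder: look up the value supplied by the caller
            return feed[self]
        args = [parent.run(feed) for parent in self.parents]
        return self.fn(*args)


def placeholder():
    """Create an input node with no value yet (hypothetical helper)."""
    return Deferred(None)


# Graph style: describe the computation first, run it later.
x = placeholder()
y = placeholder()
graph = x * y + x  # nothing is computed on this line
graph_result = graph.run({x: 3.0, y: 4.0})


# Eager style: each line computes a value immediately, so ordinary
# Python control flow can branch on intermediate results.
def eager(a, b):
    z = a * b
    if z > 10:  # the condition sees a real number, not a symbolic node
        z = z + a
    return z


eager_result = eager(3.0, 4.0)
```

In the graph half, `graph` is only a description of work to do, so a plain `if` cannot inspect its value until `run` is called. In the eager half, the intermediate `z` is an ordinary number that can be printed, debugged, or branched on, which is roughly why the eager style tends to feel more natural to Python developers.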

TensorFlow has a much larger ecosystem, with important supporting tools like the excellent Keras, a simplified API that makes TensorFlow much more consumable, and TensorBoard, an impressive visualization tool that makes it easy to plot a wide variety of model metrics.

The PyTorch ecosystem isn’t standing still though.

The fastai library, for example, which aspires to play for PyTorch a role analogous to Keras, just announced version 1.0 at the PyTorch event. Also announced at the conference: Arm, Nvidia, Qualcomm, and Intel are adding kernel integrations to improve PyTorch support on their hardware. All the major cloud vendors made supporting announcements as well, with AWS announcing SageMaker support for PyTorch 1.0 images and distributed PyTorch training; Microsoft announcing PyTorch integration with Azure, Azure ML and VS Code, as well as a host of community investments; and Google Cloud announcing initial Kubeflow and TensorBoard support for PyTorch as well as TPU support.

Beyond its support for PyTorch development, project sponsor Facebook is also investing in community growth. For example, the company announced the PyTorch Scholarship Challenge, a new initiative that will make 10,000 seats available in the forthcoming “Introduction to Deep Learning with PyTorch” course on Udacity.

My take: I’m a big fan of PyTorch’s approach to deep learning and of the fastai library. It’s clear these two have a lot to offer the deep learning research community, where they’re already making inroads. What’s less clear is the impact for businesses and enterprise users.

While I don’t believe Google offers a formal commercial support program for stand-alone TensorFlow, customers using TensorFlow in conjunction with a supported GCP product like GCE, Cloud ML Engine or Cloud TPUs can get support via those channels, and enterprises with a relationship with Google Cloud can tap their existing support resources for assistance. In short, they can get adequate commercial support.

Google counts companies as diverse as Coca-Cola, Airbus, Bloomberg and Baker Hughes GE as TensorFlow users. It’s unclear how a business could get support for PyTorch today, though if the framework’s star continues to rise I’m sure someone will step up to offer this. Until that time, having a strong competitor in the space in PyTorch will only benefit TensorFlow users by driving continued innovation.

Note: My friend Darryl Taft asked for my take on the PyTorch 1.0 announcement for an article he was writing for TechTarget. That led me to pull together my initial thoughts on the topic, which I’ve expanded upon above.

You can check out Darryl’s article here.