In my recent conversation with Sergey Levine (Associate Professor at UC Berkeley, co-founder of Physical Intelligence, and a frequent guest on the podcast), we discussed the robotics startup's recently open-sourced π0 model. This open-source foundation model for robotics combines a vision-language model with a diffusion-based action expert. It is a great example of the kind of integration driving progress across the field. If you're interested in robotic learning, the role of synthetic data, or how advanced tokenization can enable more efficient robots, I recommend giving this episode a listen.

“In robotics, every time you want to tackle a new robotics application, you have to start an entire company around it or you have to start an entire research lab. So each robotic application is just an enormous amount of work. And if we could have these general purpose models that can serve as the foundation for a huge range of applications, that would actually allow us to get robots to the next level. It would get us the kind of generalist robots that we see in science fiction basically.”

Sergey Levine, Associate Professor, UC Berkeley, and co-founder of Physical Intelligence, in 🎙️ π0: A Foundation Model for Robotics

The episode is particularly timely in light of the remarkable progress we’ve seen in robotics over the past year, with several firms pushing the boundaries of embodied AI.

- A year ago, Figure AI demonstrated the result of its OpenAI collaboration, showing the Figure 01 robot responding to natural language commands and reasoning about real-world objects and scenarios. Earlier this year, the company split with OpenAI and announced Helix, a vision-language-action model for generalized humanoid control.

- Google DeepMind recently unveiled Gemini Robotics, which brings the multimodal Gemini 2.0 model to the physical world. The model's ER (embodied reasoning) version will offer advanced capabilities including perception, state estimation, spatial understanding, planning, code generation, and in-context learning.

- At its GTC conference two days ago, NVIDIA announced its own Isaac GR00T N1 foundation model for robots. The model features a dual-system "fast and slow thinking" architecture that combines vision-language reasoning with high-speed, reflexive actions. The initiative also includes supporting frameworks such as the GR00T Blueprint for synthetic data generation and the Newton physics engine, and NVIDIA is making the GR00T N1 dataset available to researchers and developers.

- And just yesterday, Boston Dynamics' latest Atlas demo showed how reinforcement learning can complement motion capture to enable complex movements like breakdancing and gymnastics, highlighting how ML techniques are making robot behaviors more dynamic.

While the progress demonstrated by these projects is exciting, it’s wise to approach robotics demos, especially polished videos, with a degree of healthy skepticism. As recent articles from outlets like MIT Technology Review and TechCrunch highlight, demos often showcase carefully curated best-case scenarios rather than the full picture of current capabilities. (Thanks to reader Tim S. for sharing these references.)

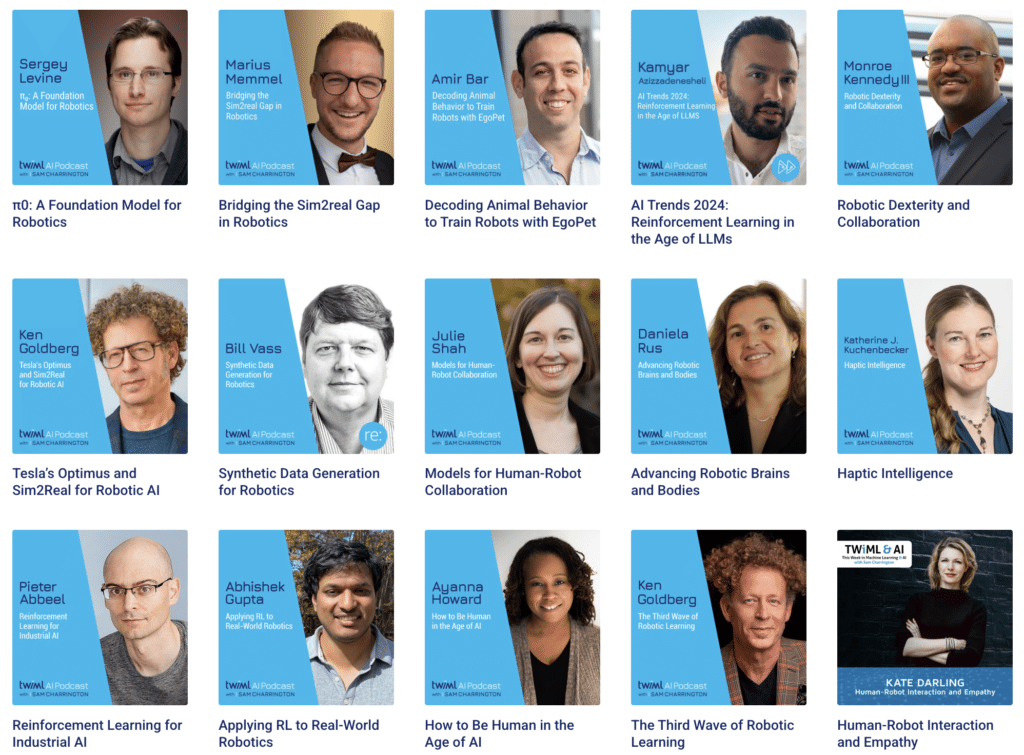

We've covered the latest advances in robotics for years and have enough episodes on the topic to keep even the most enthusiastic listener busy. To learn more, check out our Robotics topic page.