Five years ago, I published the Definitive Guide to Machine Learning Platforms. Looking back, it was a pivotal time: enterprises were starting to scale up efforts to build predictive AI models, while the tooling and best practices needed to reliably move models from the lab into production were just beginning to emerge.

Through conversations on the TWIML AI Podcast and beyond, I encountered a theme among early ML adopters: they weren’t just hiring data scientists; they were investing heavily in platforms and processes to make those teams productive and their models reliable.

Those observations directly inspired the Definitive Guide, which set out to map the emerging landscape for technology leaders navigating these new challenges. My goal was to distill these evolving best practices and platform strategies into a resource for enterprise leaders.

Today, a platform-centric mindset is more important than ever—but it also comes with greater risks. Generative AI (GenAI) innovation has accelerated so dramatically that a system built today could become outdated in a matter of weeks, or even days, as new models with expanded capabilities are released. AI leaders know they need platforms to achieve speed, scale, reliability, and compliance, but they worry about engineering against such a fast-moving target.

That pace—and the uncertainty it creates—forces enterprise technology leaders to confront new questions. What core principles still apply? Where do old assumptions break down? What matters in designing platforms for GenAI, and what’s just noise? How are industry leaders tackling these challenges?

While the foundational ideas of the Definitive Guide—the need for process, automation, repeatability, and enabling infrastructure—remain highly relevant, the explosion of GenAI and Large Language Models (LLMs) has changed the landscape. Today, those ideas are being stress-tested and reframed as organizations adapt to a new technology landscape.

“We say Capital One is a tech company that does banking. Part of being a tech company is to have a platform mindset and a strong data culture. As we advance in our AI journey, we’re taking a very similar platform-centric approach. For GenAI, for example, we’re building all of those AI capabilities in a way that the rest of the company can build on and leverage… When you have central platforms, you can apply a lot of the governance and controls in one place rather than having a federated set of controls everywhere.”

Abhijit Bose, Head of Enterprise AI and ML platforms at Capital One in 🎙️ Evolving MLOps Platforms for Generative AI and Agents

What should AI tools, platforms, and workflows look like in 2025 to support this new world?

Drawing clear lines between the “MLOps” world for predictive AI and the emerging “GenAIOps” or “LLMOps” practices isn’t straightforward.

Why? Because “Generative AI” covers more ground than the predictive modeling that preceded it. Today’s systems span everything from simple API wrappers over commercial LLMs to custom tuned open-weights models, to intricate multi-agent pipelines that blend retrieval, tool use, and reasoning. Some rely entirely on third-party endpoints; others need infrastructure for fine-tuning, hosting, or orchestrating bespoke models. And they operate on a wide range of modalities—text, image, audio, code, and more. The net result: new patterns and approaches are emerging at a speed that makes it hard for even experienced teams to keep up.

We’re at a similar stage with the industrialization of generative AI today as we were with traditional ML in 2019. The core technology is potent, the potential is immense, but the robust, repeatable practices and tooling for enterprise-scale deployment are still evolving.

This post starts a series revisiting the Definitive Guide concepts, updating them for the GenAI era. We’ll explore the changing workflows, challenges, and required platform capabilities, and what these shifts mean for enterprise technology leaders and AI practitioners.

GenAI Model Training vs. System Development

To discuss tooling and platform requirements for GenAI, we need to define what we mean by GenAI—or at least clarify its scope for our current purposes. A useful first-order distinction is that training and fine-tuning GenAI models is a distinct workflow from building GenAI applications or systems:- GenAI Model Training: This includes training a new model from scratch as well as fine-tuning an existing one. Training GenAI models follows a workflow similar to the traditional MLOps process. It begins with collecting a training dataset, moves to a rigorous experimentation and training phase, and ends with a deployment phase where models are delivered to users and managed throughout their lifecycle. Delivery can mean delivering code, weights files, and other artifacts, or hosting the model behind a live endpoint for inference. Most MLOps principles apply, but at a larger scale.

- GenAI Application/System Development: Here’s where things diverge and where much innovation lies. This category involves building applications or systems that take advantage of LLMs and other foundation models. GenAI systems can range in complexity from a “wrapper” around simple LLM calls to chained prompts, agentic workflows, Retrieval-Augmented Generation (RAG), or other advanced techniques. In any case, system development is less about training the model and more about orchestrating its effective and reliable use.

Since more projects will involve building on existing models rather than training or fine-tuning new ones, we’ll focus on GenAI application development in this post.

Who | What | |

|---|---|---|

GenAI Model Training | ML Engineers, Research Scientists, Data Scientists | Training or fine-tuning generative models |

GenAI Application/System Development | AI Engineers, Software Developers | Building applications or systems around existing models |

From MLOps to LLMOps

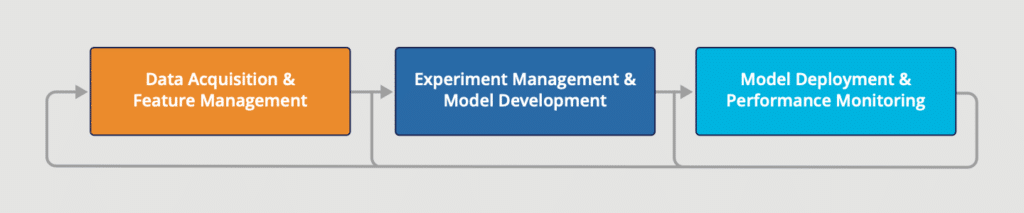

The original Definitive Guide characterized the ML process using the high-level flow depicted below.

While the overall process must evolve to fully support GenAI, we can begin to unpack how by breaking down what’s changing at each step.

Data Acquisition and Preparation

Data is key for any AI project, whether you’re training a new model or building on someone else’s. However, excluding model training and tuning, the scale needed for GenAI applications is typically much smaller.

The nearest analogy to the training dataset used in model training for GenAI projects is the evaluation dataset. This is the set of user or LLM inputs and expected responses used to measure the system’s performance as it evolves. (Note: If you’re working on an LLM development project and don’t think you have an eval set, you’re wrong; you’re just inventing it on the fly as you test your app and using “vibes” as your evaluation metric.)

Synthetic data or data generation is often used to create the evaluation dataset, just as it has been increasingly used to create training data for models.

Eval tools help manage evaluation datasets and run them against the application to support ongoing improvement.

Besides evaluation, other data sources for developing GenAI app systems include knowledge documents, databases, and embeddings for retrieval-based systems (RAG), often stored in vector databases.

Experimentation and Model Development/Training

In the GenAI application development scenario, the core loop isn’t experimenting with model hyperparameters and training runs. Instead, it is iterative prompting and system design. AI engineers experiment with different models and prompts, chaining strategies, retrieval methods, and system configurations to achieve their goals.

Success is measured by running evaluation sets and metrics against the system to assess quality, tone, safety, and factual accuracy.

When developing LLM-based applications, observability tools are analogous to experiment management tools used in predictive model development. These improve on standard logging to allow developers to trace the data and response flow within the application, providing insights into system behavior.

Model Management, Deployment & Operations

In GenAI applications, model serving is typically handled by a third-party API offered by the model provider. Consequently, a significant part of “Ops” shifts towards managing the application’s interactions with the models it uses:

Scalable logging is the foundation of full-lifecycle observability. While the LLM-specific tools preferred during development can address this need, once an application is deployed to production and operating at scale, logging to an organization’s preferred destination, such as syslog, Elastic, or via an OpenTelemetry collector, is often preferred.

Monitoring tools can help track low-level metrics like latency and downtime, as well as metrics like cost and token usage, at both the application and API levels. They can also help filter model inputs or outputs for toxicity or bias and identify problematic user interactions. Dedicated guardrails tools take this one step further, enforcing enterprise or industry constraints, ensuring safety, preventing prompt injection, managing tone, and validating response structure.

As modern GenAI systems become more complex, testing approaches must evolve beyond the simple drift detection algorithms used with predictive AI. GenAI testing platforms help users track model performance, check system robustness against edge cases and adversarial inputs, and quickly identify the cause of degraded user experiences.

Looking Ahead

This is just the first step in unpacking the evolution from traditional MLOps to robust, enterprise-grade Generative AI practices. While core principles persist, the tools, techniques, and focus areas are shifting for those building complex GenAI systems. I’m excited to explore these areas in future posts and on the TWIML AI Podcast.

Are you building or deploying GenAI systems? What challenges are you facing? What’s your LLMOps stack? I’d love to hear from you—email me (sam@) or find me on X or LinkedIn.