A Deep Dive into Google’s Evolving Agent Strategy and Platform Direction

Another Google Cloud Next is in the books. There was a lot of news to digest from the event – 229 announcements according to the official count, spanning platform technologies, models, infrastructure, customer wins, and more. I’ll cover the most important of these for AI leaders and builders in this post, but before diving into specifics, a bit of context is helpful.

Last year, Google Cloud was early and bold in recognizing the potential of AI agents, articulating a compelling vision at Next 2024 for how they could reshape enterprise software.

Google Cloud’s Agentic Vision from Next ’24

The centerpiece of this strategy was the Vertex AI Agent Builder, announced at the event. At the time, I wrote:

Vertex AI Agent Builder was one of the splashiest and buzziest announcements from Next ’24. The Agent Builder is a tool designed to help developers more easily create Agent experiences like the ones demonstrated in the keynote. A good way to think about the Vertex AI Agent Builder’s capabilities is that it lets users build an agent that can take advantage of tools, data sources, and other subagents to fulfill user requests in a given domain. Agent Builder offers a studio-style (low code) environment for Agent building and configuration, along with tools for Agent testing and monitoring.

However, my excitement was tempered. I closed that post with a reflection on the “product debt” Google Cloud was taking on:

And then there’s product. The Agent vision rolled out at Next required a large charge on the credit card Google Cloud has taken out with the Bank of Customer Trust. Sure, they didn’t finance the whole vision—the Models are state of the art, the Agent Builder is a respectable start, and many of the other pieces are in place—but these are early days for these products and rough edges are not hard to find once you start using them. To pay down this debt, Google Cloud will need to buckle down and deliver on a ton of product, integration, documentation, and education grunt work to enable customers and partners to have a shot at successfully building and deploying their own AI Agents. And the temptation to charge more shiny new things isn’t going anywhere.

This turned out to be prescient. Shortly after Next ’24, I, along with several members of the TWIML AI community, dug into Agent Builder to see what we could build. We quickly encountered the rough edges I’d anticipated. The Agent Builder product delivered last spring proved to be an agent-focused makeover of Google’s existing Dialogflow CX product. While thematically aligned with a chat-based vision of AI agents, Agent Builder struggled as a practical framework for implementing even moderately complex agentic workflows. I shared this feedback with the Vertex team early on, and it seems we weren’t alone.

To their credit, the team heard this feedback and went back to the drawing board. They recognized that their initial take on agents wouldn’t adequately support where the market was heading or meet the needs of developers looking to build sophisticated agent-based systems. It certainly helped that over the past year many teams at Google began building their own agentic applications – like NotebookLM and Agentspace – so they had significant real-world experience to draw on as they built out the next-generation Agent Builder.

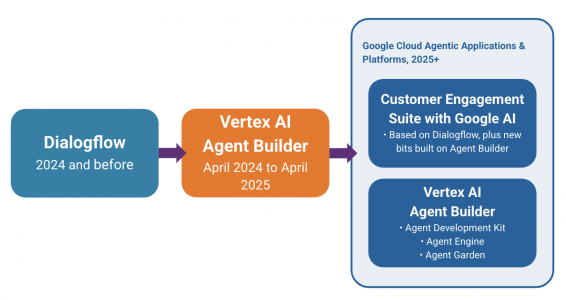

Vertex AI Agent Builder Evolution: The Dialogflow-based tooling evolved into the Customer Engagement Suite, while a new, developer-focused Agent Builder emerged based on ADK, Agent Engine, and Agent Garden.

Through this lens, several of the most interesting Vertex AI announcements at Next ’25 represent the culmination of this significant undertaking and a substantial payment against the debt I called out last year.

Let’s unpack the Agent Builder updates and other key AI announcements from this year’s event.

Agents Reimagined: The New Vertex AI Agent Builder

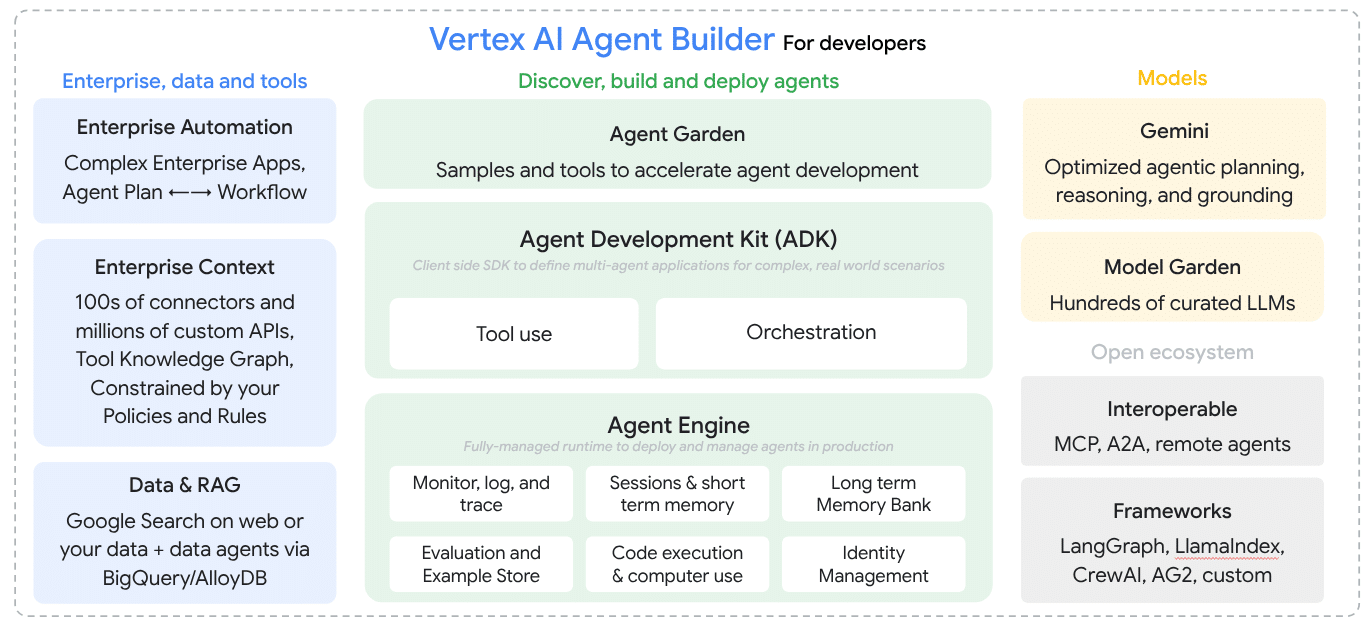

As noted above, the most significant shift in the Vertex AI platform portfolio is the welcome overhaul of the builder experience for AI agents. Instead of the low-code and inflexible Dialogflow-based approach, Agent Builder is now a rich, code-first suite of tools designed for building more complex, programmable agents.

An overview of the new Agent Builder portfolio

The new Agent Builder consists of the following key components:

1. Agent Development Kit (ADK)

The ADK is a new open-source framework for building agentic applications that offers the code-first flexibility that developers were missing from the first-generation Agent Builder. It provides constructs for orchestration, tool use (including native MCP support), session state and memory, and supports agents of different types (LLM agents, workflow agents, or custom agents), which can be composed into multi-agent systems. The approach and APIs are similar to contemporary agent frameworks like LangGraph, CrewAI, and AutoGen. Google provides a migration path from these tools by allowing developers to use LangGraph and CrewAI tools from within ADK agents out of the box. Google would like you to run ADK agents on Vertex AI Agent Engine, discussed next, but they can be run anywhere you can spin up a Docker container. The ADK is in Preview. To learn more about it, check out the docs on GitHub.

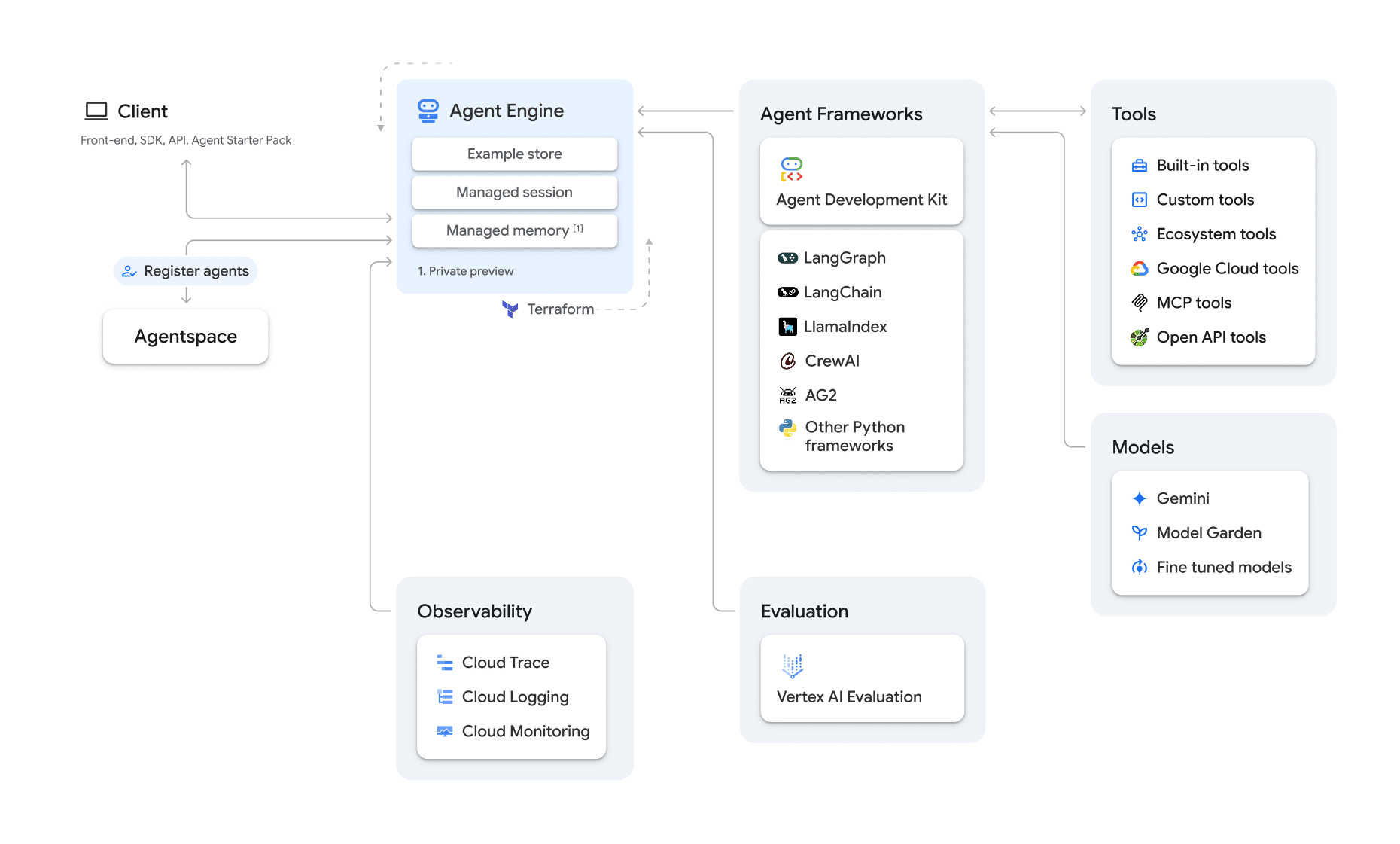
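To make the distinction between LLM agents and workflow agents a bit more concrete, here is a framework-agnostic sketch in plain Python. It does not use ADK’s actual API: `StepAgent`, `SequentialWorkflow`, and the dict-based session state are hypothetical stand-ins. It assumes a workflow agent is a deterministic orchestrator that runs sub-agents in a fixed order over shared state, while the model and tool calls an LLM agent would make are stubbed out as plain functions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Protocol

State = Dict[str, str]  # hypothetical shared session state passed between agents


class Runnable(Protocol):
    def run(self, state: State) -> State: ...


@dataclass
class StepAgent:
    """Hypothetical stand-in for an LLM agent. A real one would call a model
    and tools; here a plain function reads and updates the session state."""
    name: str
    step: Callable[[State], State]

    def run(self, state: State) -> State:
        return self.step(state)


@dataclass
class SequentialWorkflow:
    """Hypothetical stand-in for a workflow agent: no model in the loop,
    just deterministic orchestration of sub-agents in order."""
    name: str
    sub_agents: List[Runnable] = field(default_factory=list)

    def run(self, state: State) -> State:
        for agent in self.sub_agents:
            state = agent.run(state)
        return state


# Compose a tiny two-step multi-agent pipeline.
researcher = StepAgent("researcher", lambda s: {**s, "notes": f"facts about {s['topic']}"})
writer = StepAgent("writer", lambda s: {**s, "draft": f"Summary: {s['notes']}"})
pipeline = SequentialWorkflow("report_pipeline", [researcher, writer])

result = pipeline.run({"topic": "Agent Engine"})
print(result["draft"])  # Summary: facts about Agent Engine
```

Because a workflow is itself runnable, it can be nested inside another workflow, which is the basic move behind composing multi-agent systems. For ADK’s real constructs and signatures, the docs on GitHub are the place to look.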

2. Agent Engine

This is the product formerly known as “LangChain on Vertex AI,” and later “Vertex AI Reasoning Engine,” which was introduced last year as a way to allow LangChain developers to more easily take advantage of Google Cloud services and APIs when building LLM applications. Agent Engine is now positioned as a fully managed agent runtime that simplifies deploying, scaling, evaluating, managing, and monitoring agents in production. Think “AgentOps.” It promises integration with Google Cloud services like logging, tracing, and security (IAM and VPC Service Controls), effectively making ADK agents first-class citizens within the broader GCP environment. Deploying an agent via Agent Engine is essentially spinning up an Agent Engine compute instance, and users pay hourly for the instance’s compute and memory requirements (currently $0.0994/vCPU-Hr and $0.0105/GiB-Hr respectively). It’s unclear what the underlying Compute Engine resource type is, so it’s difficult to say how much of a premium users are paying for the Agent Engine runtime. Agent Engine is in Preview. More in the docs.

Vertex AI Agent Engine provides a runtime environment for agents that is easily integrated with a variety of Google Cloud and 3rd party services and APIs.
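Since billing is per vCPU-hour plus per GiB-hour, a rough cost estimate is simple arithmetic. The sketch below plugs in the preview rates quoted above; the instance shape (4 vCPUs, 8 GiB) and the roughly 730-hour month are assumptions for illustration, not documented defaults.

```python
# Agent Engine preview rates as quoted in this post (USD); check current pricing.
VCPU_HOUR_USD = 0.0994
GIB_HOUR_USD = 0.0105


def agent_engine_cost(vcpus: float, gib: float, hours: float) -> float:
    """Estimate compute + memory cost for one instance running `hours` hours."""
    hourly = vcpus * VCPU_HOUR_USD + gib * GIB_HOUR_USD
    return hourly * hours


# Hypothetical instance shape, always on for about one month (~730 hours).
monthly = agent_engine_cost(vcpus=4, gib=8, hours=730)
print(f"${monthly:,.2f}")  # $351.57
```

At these rates that hypothetical instance costs roughly $0.48 per hour, which is the figure you would compare against an equivalent Compute Engine VM to gauge the runtime premium, once the underlying resource type is known.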

3. Agent Garden

Analogous to the existing Model Garden, but for agents, Agent Garden is a repository for discovering, sharing, and reusing pre-built agent patterns, tools, and potentially full agents built by Google, partners, and the community. Many of the most interesting features are “coming soon.” As of the time of this writing, the Agent Garden primarily consists of links to agent samples on GitHub and a catalog of tools for allowing agents to interact with Google and third-party systems.

Note that the conversational AI capabilities derived from Dialogflow haven’t disappeared; they’ve been rebranded and enhanced as the Customer Engagement Suite (CES) with Google AI. This targets use cases like customer service bots and virtual agents, incorporating features like human-like voices, emotion understanding, and no-code interfaces. Some CES features are now built on top of the underlying ADK.

Expanding the Agent Ecosystem: A2A and Agentspace

Beyond the core builder tools, Google introduced two other major initiatives aimed at fostering broader agent adoption:

Agent2Agent (A2A) Protocol

This new open protocol aims to enable different agents, potentially built by different vendors or teams, to communicate and collaborate. Google enlisted over 50 partners, including heavyweights like Salesforce, SAP, ServiceNow, and Oracle, to throw support behind A2A. The official line is that A2A is complementary to the Model Context Protocol (MCP), and is focused on handling stateful, potentially bilateral communication and negotiation between agents, while MCP focuses on stateless tool/data access. (They even have a page titled “A2A ❤️ MCP” to drive this point home).
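A rough way to picture the claimed division of labor: an MCP-style tool call is a single stateless request and response, while an A2A-style interaction is a task that persists across turns and can pause for more input. The sketch below is not either protocol’s wire format. `check_stock`, `NegotiationTask`, and the inventory data are hypothetical, and the state names are only loosely modeled on the task lifecycle described in the A2A docs.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


def check_stock(sku: str) -> int:
    """MCP-style tool call (hypothetical): stateless request/response;
    nothing is remembered between calls."""
    stock = {"A-100": 12, "B-200": 0}  # hypothetical inventory data
    return stock.get(sku, 0)


class TaskState(Enum):
    # Loosely modeled on A2A task lifecycle states.
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"


@dataclass
class NegotiationTask:
    """A2A-style task (hypothetical): the remote agent keeps context across
    turns and can pause to ask the calling agent for more input."""
    budget: float
    state: TaskState = TaskState.WORKING
    history: List[str] = field(default_factory=list)

    def send(self, message: str) -> TaskState:
        self.history.append(message)
        if message.startswith("offer:") and float(message.split(":", 1)[1]) <= self.budget:
            self.state = TaskState.COMPLETED
        else:
            # No offer yet, or the offer is over budget: ask for another turn.
            self.state = TaskState.INPUT_REQUIRED
        return self.state


print(check_stock("A-100"))  # 12
task = NegotiationTask(budget=100.0)
for msg in ["hello", "offer: 120", "offer: 95"]:
    print(msg, "->", task.send(msg).value)
# hello -> input-required
# offer: 120 -> input-required
# offer: 95 -> completed
```

The tool call could be retried or cached freely; the task could not, because its answer depends on the conversation so far. That accumulated, multi-turn state is the gap A2A claims to fill.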

My initial take on A2A was one of “cautious pessimism,” but I remain open to the possibility that this solves real problems for real use cases for real developers. A2A is not the first proposal for inter-agent rules of engagement, but it’s the one that has garnered the most interest to date. I hope to dig into this space a bit more deeply sometime soon.

A2A is politically vs technologically motivated. If you look at the partners list, the backroom conversation is clear. Salesforce, SAP, Workday and Oracle don't want to be anybody's "tool". They own the data and the workflow and are building out their own agents on their own… https://t.co/uUl9ZlFLlH

— Sam Charrington (@samcharrington) April 9, 2025

Agentspace

First previewed late last year, think of Agentspace as a “corporate portal for the agentic era,” or a hub for all your (forthcoming) enterprise agents. Agentspace integrates enterprise search (via custom connectors to internal systems) with the ability for employees to discover, use, and even build their own agents.

Google envisions a continuum where users can start to build agents via just prompting (think of Custom GPTs in ChatGPT), evolve them using a visual designer (e.g. a Zapier-style builder or the new Workspace Flows), and eventually export them to code (i.e. ADK/Agent Engine) for robust deployment. This directly addresses the workflow many builders intuitively follow.

I have to admit, I find this vision compelling: democratizing agent creation, discovery, and use within an organization, with an evolutionary path from prompting to code!

The catch? Agentspace currently requires significant setup (e.g. connectors, identity provisioning, etc.) and as a result is targeted primarily at large enterprises with thousands of seats that can justify the heavy onboarding commitment. While uptake sounds strong according to Google (surprisingly, most early customers are NOT Workspace users), self-service access isn’t here yet, so opportunities to explore this hands-on are limited for most. I’m hoping to see this open up, perhaps even by Google I/O in a few weeks.

Model Advancements and Platform Optimization

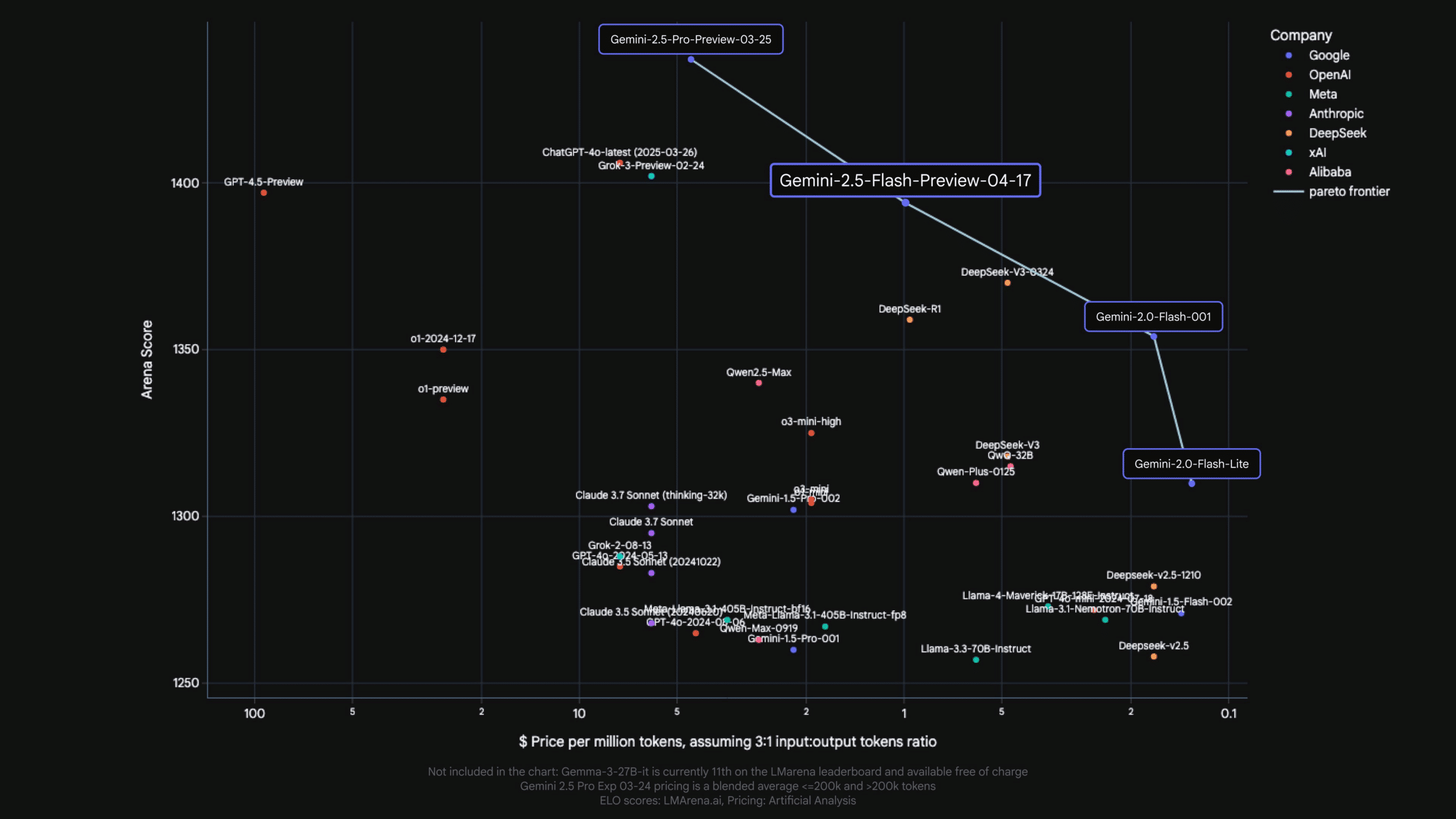

This year, Google arrived at Next in a very strong position, with its Gemini family of models effectively dominating the Pareto frontier of LLM cost versus quality. In other words, at any given price point, a Gemini model offers the highest performance (as measured by LMArena scores), and at any given performance level, a Gemini model is the most cost-effective option.

Gemini models on the price-performance Pareto frontier
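For readers less familiar with the term: a model sits on the cost/quality Pareto frontier if no other model is at least as cheap and at least as good while being strictly better on one of the two. The sketch below computes that frontier for a list of models; the names, prices, and scores are made-up placeholders, not actual LMArena scores or Gemini pricing.

```python
from typing import List, NamedTuple


class Model(NamedTuple):
    name: str
    cost: float   # hypothetical $ per 1M tokens (lower is better)
    score: float  # hypothetical arena-style rating (higher is better)


def pareto_frontier(models: List[Model]) -> List[Model]:
    """Return the non-dominated models, ordered from cheapest to priciest."""
    frontier: List[Model] = []
    best_score = float("-inf")
    # Walk models from cheapest up (ties: best score first). A model makes the
    # frontier only if it beats every cheaper-or-equal model already seen.
    for m in sorted(models, key=lambda m: (m.cost, -m.score)):
        if m.score > best_score:
            frontier.append(m)
            best_score = m.score
    return frontier


models = [
    Model("alpha", 0.5, 1200),
    Model("epsilon", 1.0, 1190),  # pricier than alpha and worse: dominated
    Model("beta", 2.0, 1250),
    Model("gamma", 3.0, 1240),    # pricier than beta and worse: dominated
    Model("delta", 10.0, 1300),
]
print([m.name for m in pareto_frontier(models)])  # ['alpha', 'beta', 'delta']
```

“Dominating the frontier” in the sense above means that most or all of the models this function returns, across the price range, would be Gemini models.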

The company used the opportunity to announce the Flash variant of its popular Gemini 2.5 model series, in addition to a variety of feature and availability updates to its generative media models including Imagen 3 (image generation/inpainting), Chirp 3 (audio/custom voice), Lyria (text-to-music), and Veo 2 (video generation/editing). Third-party model support grew with Meta’s latest Llama 4 and the Ai2 (Allen Institute for AI) family of open source models including OLMo, Tulu, and Molmo becoming available via the Vertex AI Model Garden.

Recognizing that model proliferation can be complex, Google introduced Vertex AI Model Optimizer – a single endpoint that routes requests based on user preference (i.e. prioritize cost, prioritize quality, or balance the two) to the most appropriate underlying model. This, combined with the Vertex AI Global Endpoint (providing capacity-aware routing), starts abstracting away model selection and infrastructure management for users.
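The post doesn’t describe how Model Optimizer actually picks a model, so the sketch below is purely hypothetical. It shows one simple way a preference (cost, balanced, quality) could map a request to a concrete model: each preference sets a weight on quality versus cheapness, and the router picks the highest weighted score. The candidate models, their numbers, and the weights are all made up.

```python
from typing import Dict, List, NamedTuple


class Candidate(NamedTuple):
    name: str
    cost: float     # hypothetical $ per 1M tokens
    quality: float  # hypothetical normalized quality, 0..1


# Hypothetical mapping from user preference to weight on quality (vs. cost).
QUALITY_WEIGHT: Dict[str, float] = {"cost": 0.2, "balanced": 0.5, "quality": 0.9}


def route(candidates: List[Candidate], preference: str) -> str:
    """Return the name of the candidate with the best weighted score."""
    w = QUALITY_WEIGHT[preference]
    max_cost = max(c.cost for c in candidates)

    def score(c: Candidate) -> float:
        cheapness = 1 - c.cost / max_cost  # 0 for the priciest model
        return w * c.quality + (1 - w) * cheapness

    return max(candidates, key=score).name


candidates = [
    Candidate("small", 0.3, 0.70),
    Candidate("medium", 1.5, 0.85),
    Candidate("large", 10.0, 0.95),
]
for pref in ("cost", "balanced", "quality"):
    print(pref, "->", route(candidates, pref))
# cost -> small
# balanced -> medium
# quality -> large
```

A production router would presumably also consider things like the request itself, latency, and capacity (the last being what the Global Endpoint addresses); this sketch only captures the preference dial.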

In addition, the company announced enhancements to Vertex AI Dashboards and its model training and tuning capabilities.

Also interesting is a new feature allowing customers to ground AI agents with Google Maps, so they can provide responses with geospatial information tied to places in the U.S.

Supporting Infrastructure, Data, and Developer Tools

Underpinning the AI advancements were updates across the stack:

- New Accelerator Support: The 7th gen “Ironwood” TPU was announced for inference, NVIDIA Blackwell GPUs (A4/A4X instance types) arrived, and future Rubin GPUs were promised.

- Gemini in your Private Cloud: Google is bringing Gemini to Google Distributed Cloud, its fully managed software and hardware solution for data centers and edge locations. They are partnering with NVIDIA to provide Blackwell support in GDC.

- Distributed Computing: Pathways (DeepMind’s distributed runtime that powers all of Google’s internal AI workloads) is coming to Google Cloud. vLLM now supports TPUs. Dynamic Workload Scheduler adds new instance and accelerator support.

- Data & Databases: AlloyDB AI gained enhanced vector search and natural language querying. Firestore added MongoDB compatibility. BigQuery continued its integration with Vertex AI models, added semantic search, improved data pipelines, and embraced Apache Iceberg.

- Developer Tools: Gemini Code Assist gained agentic capabilities for tasks like migration and testing. Gemini Cloud Assist expanded its integration across GCP services for troubleshooting and optimization. Firebase Studio emerged as a new cloud-based IDE focused on full-stack AI app development, integrating Project IDX, Genkit, and Gemini agents to go after the likes of v0, Lovable, and Bolt.

Customer Momentum

Google showcased hundreds of customer stories at this year’s event, moving beyond early generative AI exploration to demonstrate real business value. Highlights included:

- Citi: Deploying generative AI productivity tools to over 150,000 employees.

- Deutsche Bank: Using Gemini in “DB Lumina” to synthesize financial data for traders.

- The Sphere: Collaborating with Google DeepMind and Cloud to power elements of its immersive Wizard of Oz experience with AI.

- Wayfair: Updating product attributes 5x faster with Vertex AI.

- Mercedes-Benz: Enhancing customer interactions with AI-powered recommendations and search (mentioned at Next ’24, progress continues).

- Verizon: Enhancing customer service using the Customer Engagement Suite.

Together, these stories illustrate a broader transition I’m seeing in the industry as organizations move from experimenting with generative AI to deploying high-value use cases, and signal that Google Cloud’s role as a partner to its customers is maturing—with its platform increasingly underpinning production enterprise solutions.

Key Takeaways

This year’s Next saw Google Cloud once again showcase its comprehensive AI strategy, marked by more robust agent tooling, deeper platform integrations, and a growing focus on simplifying the developer experience.

- Agent Strategy Matures: Google has significantly course-corrected its agent strategy, moving from a limited Dialogflow-based approach to a more robust, developer-centric model with the ADK, Agent Engine, and Agent Garden. This is a major step in delivering on the vision it laid out last year and paying down last year’s product debt.

- Focus on Ecosystem: While ADK provides a strong first-party foundation, Google is actively trying to integrate with and support other frameworks (LangChain, LangGraph, AutoGen, LlamaIndex) and protocols (MCP), positioning Vertex AI as an open “AgentOps” platform. The A2A protocol aims to extend this and brings broad partner support, though its practical impact remains an open question.

- Platform Abstraction: Tools like Model Optimizer signal a move towards simplifying the developer experience by abstracting underlying model choices and infrastructure complexities.

- Full Stack Integration: Google continues to leverage its strengths across foundation models (Gemini and the media models), infrastructure (TPUs and cloud computing), data platforms (BigQuery, AlloyDB), and developer tools (Gemini Assists, Firebase Studio) to create a modern, integrated AI development and deployment environment. (But importantly, one in which you can pick and choose the pieces that serve your teams and use cases.)

- Enterprise Traction: Customer stories are evolving from “we’re exploring GenAI” to “we’re deploying GenAI agents/tools for specific business outcomes.”

Opportunities and Challenges

While progress is evident, challenges remain:

- Product Identity Churn: While iterating quickly, Google Cloud exhibits a tendency towards frequent product rebranding and restructuring. Dialogflow → Agent Builder → ADK, Agent Engine, and Customer Engagement Suite is one example. LangChain on Vertex AI → Vertex AI Reasoning Engine → Agent Engine is another, as is Project IDX plus some GenAI bits → Firebase Studio. This constant shuffling of product identity, even if strategically sound internally, imposes a cost on users who must constantly relearn the platform’s organization and map old knowledge to new names and structures (and docs!). It also feeds into a perception of Google as a capricious steward of user trust that goes back many years.

- Complexity Persists: Despite efforts like Model Optimizer, the sheer number of new tools, models, and concepts (ADK, A2A, Agentspace, media models, etc.) presents a steep learning curve. Effective education for users, partners, and internal staff is crucial, as are clearer product narratives that tie together many disparate pieces into clear pathways to value.

- New Product Immaturity: While the foundations for agents are more solid (ADK), the supporting ecosystem components like Agent Engine, Agent Garden, and even Agentspace itself are still new or “coming soon.” Delivering robust, usable versions will be key. The shift from the old Agent Builder represents significant progress, but the new offerings now need time to mature. That “credit card with the Bank of Customer Trust” I referenced last year is clearly a revolving line: Google made real payments on its product debt this year, but also racked up new charges.

- A2A vs. MCP: The introduction of A2A creates potential confusion and fragmentation. Google needs to clearly articulate the distinct value proposition and ensure developers aren’t burdened by unnecessarily supporting multiple similar protocols. Success will depend on whether A2A solves real technical problems beyond satisfying large vendor politics.

- Agentspace Go-to-Market: The current sales-led, enterprise-focused approach for Agentspace limits broader adoption, experimentation, and feedback. Opening up self-service access, even for basic tiers, would accelerate adoption and provide valuable insights.

Conclusion

Google Cloud Next ’25 showcased a company hitting its stride in the generative AI era. The ambitious agent vision laid out last year is being rebuilt on a more solid, developer-friendly foundation with the new Vertex AI Agent Builder suite (ADK, Engine, Garden). This represents significant progress in addressing the product debt I highlighted previously. Initiatives like Agentspace and A2A demonstrate a continued push to build a comprehensive agent ecosystem, though questions about execution and market fit remain for these newer elements.

Google is delivering across the stack, from silicon to sophisticated models and application-level tooling. The critical challenge remains execution in this fast-moving space – specifically, maturing new offerings, simplifying complexity for users, and demonstrating the practical value of concepts like A2A and the Agentspace vision.

I’ll be watching closely as Google I/O approaches next month for updates on product availability and strategic direction. Of course, Google I/O is also the venue for the kind of significant model announcements that reshape the landscape, adding another layer of anticipation. Let’s connect if you’re planning to attend.

Heading to #GoogleCloudNext ✈️ in Las Vegas. Tried a new use case for Gemini 2.5 Pro on the plane and my mind is blown! 🤯

— Sam Charrington (@samcharrington) April 9, 2025

Analyst schedules from AR teams are gold, but getting those packed PDF grids 📄 into my calendar 🗓️ for easy reference used to be hours of tedious manual… pic.twitter.com/7tqir8WAXF

"AI 1.0 is Cloud 4.0"

— Sam Charrington (@samcharrington) April 9, 2025

Great quote by @GrannisWill at the #GoogleCloudNext Analyst Summit kickoff, speaking to the importance of data and compute scale to #genai innovation, multimodal, agents, etc.

w/ @s_look @googlecloud pic.twitter.com/sYIZ3EngDi

.@googlecloud CEO @ThomasOrTK taking the stage to get his #googlecloudnext 2025 keynote kicked off. "Google is building for a unique moment." Let's go! pic.twitter.com/EdXszDr0B7

— Sam Charrington (@samcharrington) April 9, 2025

A bit of a victory lap for Gemini 2.5 Pro. #GoogleCloudNext @googlecloud pic.twitter.com/xTCDlmZFjK

— Sam Charrington (@samcharrington) April 9, 2025

McDonalds using edge compute and AI to make store ops more agile, intelligent. #GoogleCloudNext @googlecloud pic.twitter.com/UfGaNYDdnl

— Sam Charrington (@samcharrington) April 9, 2025

24x higher intelligence per dollar, vs other models including DeepSeek - Amin Vahdat #GoogleCloudNext @googlecloud pic.twitter.com/HIobgOfVvS

— Sam Charrington (@samcharrington) April 9, 2025

Gemini now available for air-gapped deployments #GoogleCloudNext @googlecloud pic.twitter.com/v6pevBrPLT

— Sam Charrington (@samcharrington) April 9, 2025

.@GoogleCloud adding to family of task-specific models pic.twitter.com/LC2Q3KZbkJ

— Sam Charrington (@samcharrington) April 9, 2025

Citigroup CTO David Griffiths on Citi AI journey. Focus now is scaling AI horizontally and vertically across the business. Expect to see agents in production this year. pic.twitter.com/8pKakgK8qf

— Sam Charrington (@samcharrington) April 9, 2025