I recently returned from the Google Cloud Next ’24 conference, held in Las Vegas in April. Walking into the MGM conference center for the event, I couldn’t shake the feeling that I had only just left last year’s Next. Next ’23 was, in fact, only nine months before.

Despite the accelerated timeframe between editions of this ‘annual’ event, the Google Cloud team put on an impressive show. Next ’24 welcomed twice as many attendees as the 2023 conference, effectively returning attendance to pre-pandemic levels, and offered them twice as many sessions to choose from. Those of us following Google Cloud’s progress had our hands full, too: the company made 35% more announcements than last year, for a total of 218.

| Next by the Numbers | 2023 | 2024 |
|---|---|---|
| Attendees | 15,000 | 30,000 |
| Breakouts | 250+ | 500+ |
| Announcements | 161 | 218 |

Read on for my key takeaways and reflections from the event.

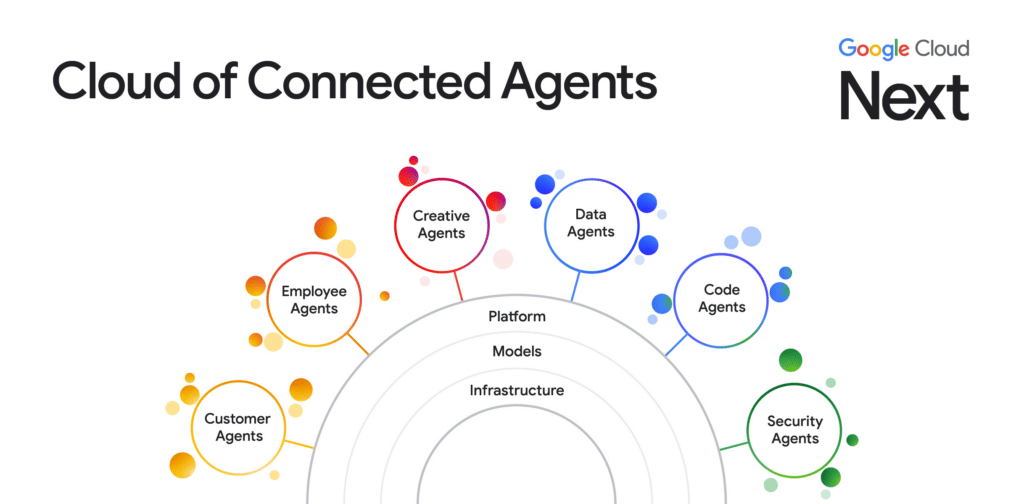

Agents, Agents, Agents

AI continued to be very much a core theme at this year’s Next. AI news, updates, and customer stories dominated nearly all of Google Cloud president and CEO Thomas Kurian’s hour-and-forty-minute keynote presentation. For much of the keynote, the announcements came in such quick succession that it was difficult to keep up with it all.

Like last year, generative AI (GenAI) was a key focus at the conference. This time around, however, Google Cloud seized the opportunity to present its bold new vision of AI Agents as the future of enterprise generative AI.

Vertex AI Agent Builder

Vertex AI Agent Builder was one of the splashiest and buzziest announcements from Next ’24. The Agent Builder is a tool designed to help developers more easily create Agent experiences like the ones demonstrated in the keynote. A good way to think about the Vertex AI Agent Builder’s capabilities is that it lets users build an agent that can take advantage of tools, data sources, and other subagents to fulfill user requests in a given domain. Agent Builder offers a studio-style (low code) environment for Agent building and configuration, along with tools for Agent testing and monitoring.

Note: Having spent some time exploring Vertex AI Agent Builder, I have a lot more to say about it. Keep an eye out for a future post on the topic.

Grounding with Google Search and Enterprise Data

This is an announcement I have been anticipating for a year, and I was excited to finally see it come to fruition at Next. I don’t really feel it’s received enough attention.

The number one GenAI use case that enterprises are excited about today is some version of retrieval-augmented generation (RAG). This is often described as “ChatGPT for my data,” but that’s really just the start. At the core of RAG is the idea that when a query is sent to an LLM (or Agent), a search is first run against some data source and the results are provided to the LLM as context for its response. This has the benefit of both incorporating external data unknown to the LLM and limiting the LLM’s tendency to hallucinate in generating its responses.
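To make the retrieve-then-generate flow concrete, here is a minimal sketch in plain Python. It is not Vertex AI code: the `DOCUMENTS` list, the word-overlap `retrieve` function, and the prompt wording are all hypothetical stand-ins, and the final call to an LLM is left out entirely. The point is only to show where the retrieved context enters the prompt.

```python
import re

# Hypothetical in-memory "data source"; a real system would query a
# search index or database instead.
DOCUMENTS = [
    "Next '24 welcomed 30,000 attendees in Las Vegas.",
    "Vertex AI Agent Builder offers a low-code environment for building agents.",
    "The TPU v5p is now generally available.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Rank documents by shared words with the query (a stand-in for real search)."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_grounded_prompt(query, documents, top_k=2):
    """Assemble the prompt an LLM would receive: retrieved context plus the question."""
    context = retrieve(query, documents, top_k)
    context_block = "\n".join(f"- {doc}" for doc in context)
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}"
    )


prompt = build_grounded_prompt("How many attendees did Next '24 have?", DOCUMENTS)
print(prompt)
```

In a real deployment the retrieval step would be a proper search over enterprise data, and the assembled prompt would be sent to the model; the structure of the flow stays the same.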

Google Cloud introduced two types of grounding at Next. Grounding with Enterprise Data allows users to ground LLM responses against enterprise Data Sources configured in Vertex AI. And Grounding with Google Search introduces the ability to ground LLM responses using the Google Search knowledge graph. The ability to ground responses against Google Search allows the LLM to incorporate trusted*, up-to-date information found on the web and include relevant citations in its response. This feature could potentially disrupt startups like Perplexity and You.com, which have built their entire companies around this very idea.

*Trusted, to the degree that Google’s PageRank algorithm can confer trust.

Vectorizing All the Things

Continuing along the RAG theme, another key product trend evident at Next was the “Vectorization of All the Things.” Google Cloud is focusing on enhancing its various databases and data platforms with the ability to natively create vector embeddings and offer vector search capabilities so that their contents can be more easily integrated into RAG workflows as well as to facilitate and improve search, recommendation, and classification tasks.

BigQuery, AlloyDB, Memorystore for Redis Cluster, and Firestore all received the vectorization makeover at Next.
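For readers new to the idea, vector search boils down to comparing a query embedding against stored embeddings and returning the closest matches. The sketch below illustrates that with cosine similarity in plain Python. The three-dimensional vectors and document names are made up for illustration; real embeddings come from an embedding model and have hundreds of dimensions, and none of this reflects how the Google Cloud products above implement the feature.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical document names mapped to made-up embeddings.
INDEX = {
    "tpu pricing guide": [0.9, 0.1, 0.0],
    "gpu instance overview": [0.7, 0.3, 0.1],
    "holiday party menu": [0.0, 0.1, 0.9],
}


def vector_search(query_vector, index, top_k=2):
    """Return the names of the top_k entries most similar to the query vector."""
    ranked = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [name for name, _ in ranked[:top_k]]


results = vector_search([1.0, 0.2, 0.0], INDEX)
print(results)
```

A database with native vector support does the same comparison at scale, typically with an approximate nearest-neighbor index rather than the exhaustive scan shown here.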

Vertex AI hybrid search was also announced, integrating vector-based and keyword-based search techniques to enhance its users’ search experience.
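One common way to combine a keyword ranking with a vector ranking is reciprocal rank fusion (RRF). The sketch below shows that technique as an illustration of what "hybrid" can mean; it is an assumption on my part, not a description of how Vertex AI merges its results. The document IDs and the two input rankings are hypothetical, and `k=60` is just the constant conventionally used with RRF.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one list.

    Each document scores 1 / (k + rank) for every list it appears in,
    so items ranked well by both retrievers rise to the top.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


# Hypothetical outputs of a keyword retriever and a vector retriever.
keyword_ranking = ["doc_a", "doc_b", "doc_c"]
vector_ranking = ["doc_b", "doc_d", "doc_a"]

fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
print(fused)
```

Because RRF works on ranks rather than raw scores, it sidesteps the problem of keyword and vector scores living on different scales.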

LLM Application Developer Tools

Two other exciting announcements for AI engineers and developers include the release of Vertex AI Prompt Management and Vertex AI Rapid Evaluation, both in preview. Together these tools aim to help users deliver reliable LLM applications more quickly by supporting important aspects of prompt engineering.

The Prompt Management tool is focused on allowing teams to more easily track and manage their LLM prompts and prompt parameters, and compare the performance of those prompts and prompt versions against one another. The Rapid Evaluation tool aims to help users quickly assess model performance for a specific set of tasks.

Prompt management and model evaluation are pain points for anyone working with LLMs in a serious way, such as building apps or Agents around them, so these are welcome new capabilities.

Increased Model Availability

While we didn’t get any new foundation models at Next, we did see broader availability of Google’s latest models as well as several new model features.

In particular, Gemini 1.5 Pro, which at 1 million tokens has the largest context window of any LLM, became broadly available at Next as it transitioned to public preview on Vertex AI.

We also saw new Imagen 2.0 features arrive on Vertex AI, including image inpainting, outpainting, and watermarking capabilities (GA) and the ability to generate short (4-second) animated GIFs from text prompts.

Google’s CodeGemma code generation model was also added to Vertex AI.

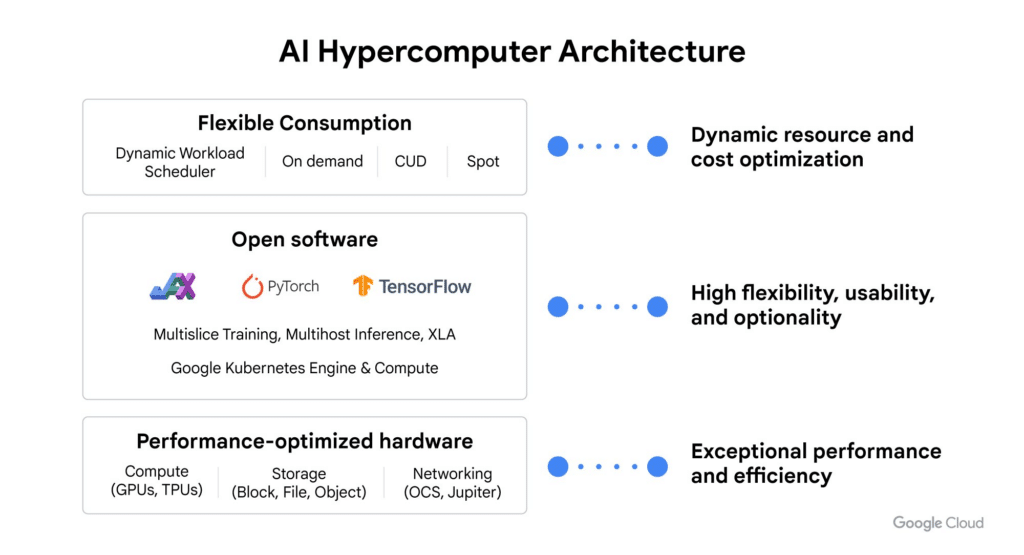

AI Hypercomputer and Infrastructure Advancements

Serverless be damned, all these Agents still need to run someplace, and Google Cloud continued to update its AI infrastructure stack, dubbed the “AI Hypercomputer,” at Next.

Its fifth-gen, performance-oriented TPU, the v5p, is now generally available, and Google Cloud continues to make progress with TPU software support, including greater support for training and serving PyTorch HuggingFace models via Optimum-TPU, support for JAX-based models via MaxText, support for throughput- and memory-optimized LLM serving via JetStream, and comprehensive GKE support for the v5p TPU.

The company also announced new A3 Mega instances powered by NVIDIA H100 GPUs, and confirmed that NVIDIA’s new Blackwell GPUs will be available to customers. The latter will be offered in two configurations, one for demanding AI and HPC workloads and another for real-time LLM inference and training at massive scale.

Lastly*, the company announced Google Axion, its first custom Arm-based CPU. Google is targeting general-purpose compute and traditional datacenter workloads for Axion, though folks doing CPU-based model training and inference will surely benefit from the performance increases of 30% and 50% over competitive Arm- and x86-based compute instances, respectively.

*By “Lastly,” I mean for this section. There were a lot more infrastructure announcements at Next. For more on the infrastructure announcements, check out Google Cloud’s AI Hypercomputer blog post.

Customer Success Stories

Finally, the conference highlighted numerous real-world customer stories illustrating the progress Google Cloud customers are making in implementing GenAI:

Bayer: Building a radiology platform to assist radiologists, improving efficiency and diagnosis turnaround time. This platform aims to streamline the diagnostic process, leveraging AI to enhance the accuracy and speed of radiological assessments.

Best Buy: Leveraging the Gemini LLM to create virtual assistants for troubleshooting, rescheduling deliveries, and more. These AI-driven solutions are designed to enhance customer experience and operational efficiency.

Discover Financial: Empowering nearly 10,000 customer care agents with generative AI capabilities to improve productivity and customer satisfaction. By automating routine tasks and providing real-time assistance, Discover Financial aims to significantly boost agent performance and customer service quality.

Mercedes-Benz: Enhancing its online storefront and customer interactions with AI-powered recommendations, search, and personalized campaigns. This initiative is part of a broader strategy to integrate AI across various customer touchpoints, improving overall customer engagement and satisfaction.

Orange: Expanding its partnership with Google Cloud to deploy generative AI across its operations, improving customer experience and workforce productivity. This collaboration focuses on leveraging AI to streamline operations and enhance service delivery in the telecommunications sector.

And there are 96 more where those came from! Check out the Google Cloud customer use cases blog post for the rest.

Key Takeaways

Long story short, this was another impressive Google Cloud Next in my book.

The company articulated a bold vision for generative AI in the enterprise in the form of Agents, and strongly supported that vision through demonstrations, customer stories, and a full slate of product announcements and updates.

The company is delivering on that vision with what amounts to the industry’s only full-stack AI offering, spanning silicon to Agents vertically, and research to production horizontally, powered by a full complement of best-in-class first-party models and an open catalog of third-party models.

And, the company demonstrated that it has its finger on the pulse of emerging enterprise AI use cases and requirements with its numerous new features to enable RAG and vectorization across products.

I also saw evidence of a continued emphasis on, and success with, both delivery and technology partners throughout the event.

Further, in my briefings with company executives, I continue to see that they are very customer-focused and have strong execution-oriented leadership in place. (This wasn’t always true for Google Cloud.)

Opportunities

Many of the key challenges Google Cloud will continue to face are a direct result of the speed at which they’re executing in AI.

The pace of announcements during the keynotes was notable, for sure, but also a bit overwhelming, even for a seasoned industry-watcher such as myself. Google will need to work hard to ensure that customers and partners can keep up with this rapid pace and effectively integrate these new tools into their operations.

The same challenge is present internally as well, and the company will need to continue investing in training its sales and customer-facing teams to ensure they stay up to date on all the latest technology.

And then there’s product. The Agent vision rolled out at Next required a large charge on the credit card Google Cloud has taken out with the Bank of Customer Trust. Sure, they didn’t finance the whole vision—the Models are state of the art, the Agent Builder is a respectable start, and many of the other pieces are in place—but these are early days for these products and rough edges are not hard to find once you start using them. To pay down this debt, Google Cloud will need to buckle down and deliver on a ton of product, integration, documentation, and education grunt work to enable customers and partners to have a shot at successfully building and deploying their own AI Agents. And the temptation to charge more shiny new things isn’t going anywhere.

I’ll be keeping my eyes on the statement balance and reporting back on Google Cloud’s credit score when Next returns to Las Vegas in April of 2025.

For more real-time updates and insights, follow me on X.

Just boarded for Las Vegas for #GoogleCloudNext and will be flying through the eclipse. What should I be worried about?

— Sam Charrington (@samcharrington) April 8, 2024

Great to see @googlecloud promoting its relationships with innovative #AI startups at #GoogleCloudNext @ajratner @c_valenzuelab @agermanidis @hwchase17 any of you guys around? pic.twitter.com/1ob39Os59E

— Sam Charrington (@samcharrington) April 9, 2024

Excited to be here at the #GoogleCloudNext keynote. TK about to get things kicked off! pic.twitter.com/wHO1x7mgW0

— Sam Charrington (@samcharrington) April 9, 2024

Ecosystem and AI already teed up in the opening moments of the keynote. #GoogleCloudNext @googlecloud pic.twitter.com/p5fUUskVau

— Sam Charrington (@samcharrington) April 9, 2024

Product announcements starting at the infrastructure level with Amin Vahdat talking through various compute updates. Literally running through them at one every 5 seconds. It's insane. Focus is on the elements of their infra stack aka AI Hypercomputer. #GoogleCloudNext… pic.twitter.com/voMlSA8kIo

— Sam Charrington (@samcharrington) April 9, 2024

Big news on the compute side, new Google Axion Processors. 50% better performance vs x86 #GoogleCloudNext @googlecloud pic.twitter.com/99u5QhvM44

— Sam Charrington (@samcharrington) April 9, 2024

"With these additions, Google Cloud continues to be the only cloud provider to offer first-class first party, third party and open source AI models."

— Sam Charrington (@samcharrington) April 9, 2024

– TK #GoogleCloudNext @googlecloud

(Finally! Been waiting for this for a year…)

— Sam Charrington (@samcharrington) April 9, 2024

Google announces grounding (eg RAG) with the Google Search knowledge graph!

Is this a @perplexity_ai killer?#GoogleCloudNext @googlecloud pic.twitter.com/8mrLzd2MX4