To hear about Josh Tobin’s various projects in robot learning, check out the full interview!

The discussion on projects outside of the NeurIPS paper picks up at 15:58 in the podcast. Enjoy!

Robots have a particularly hard time with “reading a room.” To know how to act, they first need to understand their environment, much like learning where everything is when you enter a grocery store for the first time. For machines, three-dimensional spatial awareness (figuring out where objects are, how they are positioned, and even where the robot itself sits in the environment) is incredibly complex. Real-world scenarios can be unpredictable and challenging to simulate, so how can we improve perception so machines can successfully understand the world around them?

Josh Tobin has dedicated his research to improving robots’ ability to accomplish real-world tasks. He finished his PhD at UC Berkeley under Pieter Abbeel, whom we had the opportunity to interview for a Reinforcement Learning Deep Dive. Josh recently took a break from his role at OpenAI and joins us from NeurIPS 2019, where he presented his paper on Geometry Aware Neural Rendering, which extends neural rendering of 3D scenes to more complex, higher-dimensional settings.

Neural Rendering and Generative Query Networks

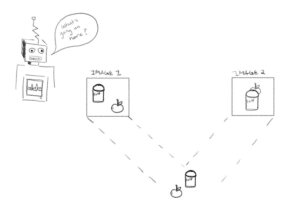

Neural rendering is the practice of observing a scene from multiple viewpoints and having a neural network model render an image of that scene from a different, arbitrary viewpoint. For example, if you have three cameras taking in different perspectives of an environment, the model would use that information to reconstruct a fourth viewpoint. The intuition behind this process is that the model has demonstrated a good understanding of the space if it can predict a strong representation of that view.

Tobin’s work is an extension of the ideas presented in a paper from DeepMind on Neural Scene Representation and Rendering. The paper introduces Generative Query Networks (GQN), which the DeepMind paper refers to as “a framework within which machines learn to represent scenes using only their own sensors.” The significance of GQNs is that they do not rely on a massive amount of human-labeled data to produce scene representations. Instead, the model gleans the “essentials” of a scene from images, “constructs an internal representation, and uses this to predict the appearance of that scene.”

In GQN, they take the problem of neural rendering and set up a model structure that works with an encoder-decoder architecture. As Josh describes, “The encoder takes each of the viewpoints and maps them through a convolutional neural network independently, so you get a representation for each of those viewpoints. Those representations are summed.” This creates a representation for the entire scene which is then passed on to the decoder. “The decoder’s job is to…go through this multi-step process of turning [the representation] into what it thinks the image from that viewpoint should look like.”
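The encode-then-sum step can be sketched in a few lines. This is only an illustration of the aggregation idea, not the GQN implementation: `encode_view` is a hypothetical stand-in for the convolutional encoder, and the toy "images" are short lists of pixel intensities.

```python
import math

def encode_view(pixels, camera_pose):
    """Hypothetical stand-in for the per-view CNN: one (image, pose) pair -> one vector."""
    mean_intensity = sum(pixels) / len(pixels)
    # Mix image content with the camera pose so each code depends on both.
    return ([mean_intensity * math.cos(p) for p in camera_pose]
            + [mean_intensity * math.sin(p) for p in camera_pose])

def aggregate(views):
    """Encode every view independently, then sum the codes element-wise."""
    codes = [encode_view(pixels, pose) for pixels, pose in views]
    return [sum(component) for component in zip(*codes)]

# Three toy context views: (pixel intensities, camera pose angles).
views = [
    ([0.1, 0.4, 0.9], [0.0, 1.0]),
    ([0.3, 0.3, 0.2], [0.5, -0.2]),
    ([0.8, 0.6, 0.7], [1.5, 0.3]),
]
scene = aggregate(views)
shuffled = aggregate(list(reversed(views)))
```

Because the per-view codes are summed, the scene representation has the same size no matter how many context views are supplied, and it does not depend on the order in which they arrive (`scene` and `shuffled` agree up to floating-point rounding).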

GQNs are a powerful development, but there is still a bottleneck that occurs when the representation produced by the encoder is passed to the decoder. This is where the geometry aware component (the main contribution of Tobin’s paper) comes in.

Geometry Awareness: Attention Mechanism and Epipolar Geometry

Josh’s primary goal “was to extend GQN to more complex, more realistic scenes,” meaning “higher-dimensional images, higher-dimensional robot morphologies, and more complex objects.”

Their approach was to use a scaled dot-product attention mechanism. “The way the attention mechanism works is by taking advantage of this fact of 3D geometry called epipolar geometry,” which refers to viewing something from two points and defining the relationship between them. In this case, epipolar geometry refers to knowing “the geometry of the scene, so where the cameras are relative to one another.”

If you’re a machine trying to render an image from a particular viewpoint, you want to “go back and look at all of the images that you’ve been given as context, and search over those images for relevant information. It turns out, if you use the geometry of the scene [epipolar geometry]… then you can constrain that search to a line in each of the context viewpoints” and attend to the pixels that are most relevant to the image you’re constructing.
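To see why the search collapses to a line, here is a small sketch of the standard epipolar constraint. It assumes two pinhole cameras with normalized image coordinates (identity intrinsics) and a known relative pose, where a point in the first camera's frame maps to the second by `R @ X + t`. The function names and the specific pose values are illustrative assumptions, not taken from the paper.

```python
import math

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def matvec(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def project(point):
    """Pinhole projection to homogeneous normalized image coordinates."""
    return [point[0] / point[2], point[1] / point[2], 1.0]

def epipolar_line(pixel_1, rotation, translation):
    """Line (a, b, c) in view 2, with a*u + b*v + c = 0, that contains every
    possible match for pixel_1. Equivalent to E @ x1 with E = [t]_x R."""
    return cross(translation, matvec(rotation, pixel_1))

# Assumed relative pose of camera 2 with respect to camera 1.
angle = math.radians(20)
R = [[math.cos(angle), 0.0, math.sin(angle)],
     [0.0, 1.0, 0.0],
     [-math.sin(angle), 0.0, math.cos(angle)]]
t = [-1.0, 0.1, 0.3]

ray_cam1 = [0.4, -0.2, 3.0]          # a 3D point seen by camera 1
x1 = project(ray_cam1)
line = epipolar_line(x1, R, t)

# Slide the point along camera 1's viewing ray: its image in camera 2 moves,
# but it always stays on the same epipolar line.
residuals = []
for depth_scale in (0.5, 1.0, 2.0, 10.0):
    point_cam1 = [depth_scale * c for c in ray_cam1]
    point_cam2 = [a + b for a, b in zip(matvec(R, point_cam1), t)]
    residuals.append(dot(line, project(point_cam2)))
```

Every entry in `residuals` is zero up to rounding, which is the property the attention mechanism exploits: whatever the unknown depth of a pixel, its counterpart in another view can only lie along one line.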

“For each pixel, we’re constructing this vector that represents a line. When you aggregate all of those you have two spatial dimensions for the image. So you get this 3D tensor and you’re dot-producting the image that you’re trying to render…and that’s the attention mechanism.”
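For a single target pixel, scaled dot-product attention over the features sampled along its epipolar line might look like the sketch below. The real model operates on whole tensors of image features; the per-pixel function, the feature values, and the names here are assumptions made for illustration.

```python
import math

def softmax(scores):
    peak = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend_along_line(query, keys, values):
    """Scaled dot-product attention for one target pixel.

    keys and values hold one feature vector per sample taken along
    that pixel's epipolar line in a context image.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    attended = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, attended

query = [1.0, 0.0, 0.5, -0.5]               # feature of the pixel being rendered
keys = [
    [0.0, 1.0, 0.0, 0.0],
    [2.0, 0.0, 1.0, -1.0],                  # points the same way as the query
    [-1.0, 0.0, 0.0, 0.5],
]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights, attended = attend_along_line(query, keys, values)
```

The second sample along the line receives the largest weight because its key is most aligned with the query, so the attended feature is pulled toward that sample's value.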

The New Data Sets

To evaluate the performance of the new model, they developed several new data sets:

In-Hand OpenAI Block Manipulation.

This is a precursor to OpenAI’s Rubik’s Cube project. In this data set, “You have a bunch of cameras looking at a robot hand that’s holding a block. The colors of the cube, the background, and the hand are randomized and the hand and the cube can be in any pose.”

Disco Humanoid.

This is Josh’s term for the data set because it looks like a “humanoid model that’s doing crazy jumping-in-the-air dance moves.” It’s similar to the MuJoCo humanoid model except that the colors, poses of the joints, and the lighting are completely randomized. It’s meant to test “whether you can model robots that have complex internal states with this high-dimensional robot that you need to model in any pose.”

Rooms-Random-Objects.

The most challenging data set they introduced involved simulations of a room with objects taken from ShapeNet, a data set with over 51,000 3D models. “Each of the million scenes that we generated had a different set of objects placed in the scene. It’s really challenging because the model needs to understand how to render essentially any type of object.”

Randomization is a key part of each data set. As Josh puts it, “If you want to train a model in simulation that generalizes to the real world, one of the most effective ways of doing that is to massively randomize every aspect of the simulator.”
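In code, this kind of randomization often amounts to drawing every scene parameter from a broad distribution before each render. The sketch below is a generic illustration; the field names and ranges are hypothetical and are not the parameters used to build these data sets.

```python
import random

def sample_scene(rng):
    """Draw one randomized scene configuration (all fields are hypothetical)."""
    return {
        "object_rgb": [rng.random() for _ in range(3)],
        "background_rgb": [rng.random() for _ in range(3)],
        "light_intensity": rng.uniform(0.2, 2.0),
        "joint_angles": [rng.uniform(-3.14, 3.14) for _ in range(5)],
    }

rng = random.Random(0)                      # seeded so the data set is reproducible
scenes = [sample_scene(rng) for _ in range(1000)]
```

Each configuration would then be handed to the simulator to render the context and target views for one scene.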

Evaluating Performance and Results

To evaluate their results, the team compared their model with GQN using several metrics, including the evidence lower bound (ELBO), which is a lower bound on the log likelihood, per-pixel error (L1, the mean absolute error, and L2), and a qualitative review of rendered images.
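The two per-pixel metrics are simple to compute. The sketch below assumes images flattened to lists of intensities in [0, 1], and it assumes the L2 figure is a root mean squared error, which the text above does not spell out.

```python
import math

def mean_absolute_error(rendered, target):
    """L1: average absolute difference per pixel."""
    return sum(abs(r - t) for r, t in zip(rendered, target)) / len(target)

def root_mean_squared_error(rendered, target):
    """L2 (assumed here to mean RMSE): penalizes large per-pixel errors more heavily."""
    return math.sqrt(sum((r - t) ** 2 for r, t in zip(rendered, target)) / len(target))

target = [0.0, 0.5, 1.0, 0.25]              # ground-truth pixels
rendered = [0.1, 0.5, 0.8, 0.25]            # model output for the same pixels
l1 = mean_absolute_error(rendered, target)
l2 = root_mean_squared_error(rendered, target)
```

For these toy values the L1 error is about 0.075 and the L2 error about 0.112; the gap between them reflects the L2 metric's heavier weighting of the single largest mistake.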

“The main result of the paper is we introduced a few new data sets that capture some of those properties, and then we showed that our attention mechanism produces qualitatively and quantitatively much better results on new, more complex datasets.”

They have yet to test the system in the real world. As Josh notes, “right now, it only captures 3D structure, it doesn’t capture semantics, or dynamics, or anything like that, but I think it’s an early step along that path.”

Josh is working on various related projects around data efficiency in reinforcement learning and some fascinating work on sim-to-real applications.