Deep reinforcement learning (DRL) is an exciting area of AI research, with potential applications across a variety of problem domains. We’ve discussed DRL several times on the podcast, and just this week we took a deep dive into it during the TWIML Online Meetup. (Shout out to everyone who attended!) Our presenter, Sean Devlin, did a great job explaining the major ideas underlying DRL. If this week’s newsletter inspires you to dig more deeply into how RL works, the meetup recording, which will be posted shortly, is a good place to start.

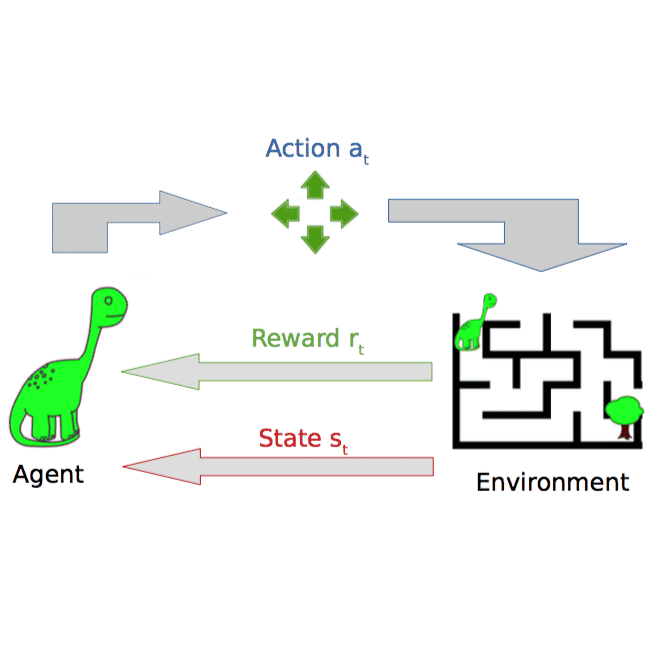

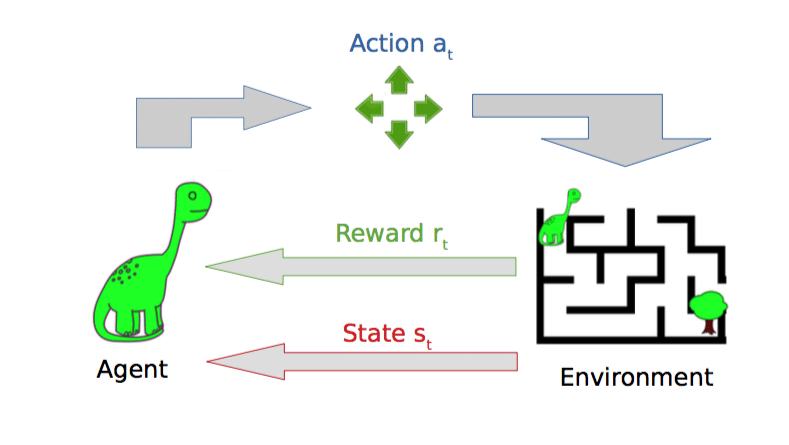

First, a quick refresher on the basic idea behind reinforcement learning. Unlike supervised machine learning, which trains models based on known-correct answers, in reinforcement learning the model is trained by having an agent interact with an environment. When the agent’s actions produce desired results–for example, scoring a point or winning the game–the agent gets rewarded. Put simply, the agent’s good behaviors are reinforced.

Credit: Sean Devlin, for March 2018 TWIML Online Meetup
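The reward signal described above is usually formalized as a *discounted return*: the agent tries to maximize the sum of its future rewards, with rewards further in the future weighted down by a discount factor γ between 0 and 1. The minimal sketch below computes that quantity for every step of one episode; the reward list and γ = 0.9 are made-up illustrative values, not anything taken from the meetup.

```python
def discounted_returns(rewards, gamma=0.99):
    """Return G_t for every time step t of a single episode.

    G_t = r_{t+1} + gamma * r_{t+2} + gamma**2 * r_{t+3} + ...
    Computed backwards so each step reuses the return of the step after it.
    """
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# Illustrative episode: only the final action earns a reward (say, scoring a point).
episode_rewards = [0.0, 0.0, 1.0]
print(discounted_returns(episode_rewards, gamma=0.9))
```

Because of the discount, the early actions that set up the point still receive credit, just less of it than the action that scored.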

One of the key challenges in applying DRL to non-trivial problems is constructing a reward function that encourages desired behaviors without undesirable side effects. Another is managing the tradeoff between taking advantage of what the agent has already learned (exploitation) and investigating new behaviors or new parts of the environment (exploration).
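The exploration/exploitation tradeoff is easiest to see in a multi-armed bandit, a single-state simplification of RL in which the agent repeatedly picks one of several slot machines with unknown payout rates. Below is a minimal sketch of the common epsilon-greedy strategy; the arm probabilities, step count, and epsilon = 0.1 are arbitrary assumptions for illustration, and epsilon-greedy is only one of several exploration strategies in use.

```python
import random


def epsilon_greedy_bandit(arm_probs, steps=5000, epsilon=0.1, seed=0):
    """Play a Bernoulli multi-armed bandit with an epsilon-greedy policy.

    With probability epsilon the agent explores (picks a random arm);
    otherwise it exploits (picks the arm with the highest estimated value).
    Returns the per-arm value estimates and how often each arm was pulled.
    """
    rng = random.Random(seed)
    n_arms = len(arm_probs)
    estimates = [0.0] * n_arms  # running mean reward per arm
    counts = [0] * n_arms       # number of pulls per arm

    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                           # explore
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        reward = 1.0 if rng.random() < arm_probs[arm] else 0.0
        counts[arm] += 1
        # Incremental mean update: Q <- Q + (r - Q) / n
        estimates[arm] += (reward - estimates[arm]) / counts[arm]

    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
print("estimated values:", [round(v, 2) for v in estimates])
print("pulls per arm:   ", counts)
```

Setting epsilon to 0 makes the agent purely greedy, so it can lock onto whichever arm happened to pay out first; raising it spends more pulls on arms already known to be worse.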

It’s worth noting that while deep reinforcement learning (“deep” here refers to the fact that the underlying model is a deep neural network) is still a relatively new field, reinforcement learning itself has been around since the 1970s, or earlier, depending on how you count. As Andrej Karpathy points out in his 2016 blog post, pivotal DRL work such as the AlphaGo paper and the Atari Deep Q-Learning paper builds on reinforcement learning algorithms that have been around for a while, but swaps in deep neural networks for other ways of approximating functions. That use of deep learning is, of course, enabled by the explosion in inexpensive compute power we’ve seen over the past 20+ years.
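To make the “swap in deep learning” point concrete, here is a minimal sketch of classic tabular Q-learning, whose update rule is Q(s, a) ← Q(s, a) + α[r + γ max_a′ Q(s′, a′) − Q(s, a)]. The corridor environment and all hyperparameters are invented for illustration. In deep Q-learning, the lookup table `q` would be replaced by a neural network that maps an observation (for Atari, raw screen pixels) to one Q-value per action and is trained toward the same target; the Atari paper also adds stabilizing machinery such as experience replay, which this sketch omits.

```python
import random


def q_learning_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                        epsilon=0.2, max_steps=100, seed=0):
    """Tabular Q-learning on a toy 1-D corridor (hypothetical environment).

    States are 0..n_states-1; the agent starts at 0 and the episode ends with
    reward 1 when it reaches the last state. Actions: 0 = left, 1 = right.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    # The "function approximator" here is just a lookup table q[state][action].
    q = [[0.0, 0.0] for _ in range(n_states)]

    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy action selection, breaking ties randomly.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else min(goal, state + 1)
            done = next_state == goal
            reward = 1.0 if done else 0.0
            # Q-learning target r + gamma * max_a' Q(s', a'); no bootstrapping at the goal.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = q_learning_corridor()
greedy_policy = ["right" if q[1] > q[0] else "left" for q in q_table[:-1]]
print(greedy_policy)
```

After training, the greedy policy moves right in every non-goal state, and the learned values fall off by a factor of γ per step of distance from the goal.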

Some people view DRL as a path to artificial general intelligence (AGI) because it mirrors how humans learn: by exploring their environment and receiving feedback from it. Recent successes of DRL agents in besting human players at video games, the well-publicized defeat of a Go grandmaster by DeepMind’s AlphaGo, and demonstrations of bipedal agents learning to walk in simulation have all contributed to enthusiasm about the field.

The promise of DRL, along with Google’s 2014 acquisition of DeepMind for $500 million, has led to the formation of a number of startups hoping to capitalize on this technology. I’ve previously interviewed Mark Hammond, a founder of Bonsai, which offers a development platform for applying deep reinforcement learning to a variety of industrial use cases, and Pieter Abbeel, a founder of Embodied Intelligence, a still-stealthy startup looking to apply VR and DRL to robotics. Osaro, backed by Jerry Yang, Peter Thiel, Sean Parker, and other boldface-named investors, is also looking to apply DRL in this space. Meanwhile, Pit.ai is seeking to best traditional hedge funds by applying DRL to algorithmic trading, and DeepVu is applying DRL to the challenge of managing complex enterprise supply chains.

As a result of increased interest in DRL, we’ve also seen the creation of new open-source toolkits and environments for training DRL agents. Most of these frameworks are essentially special-purpose simulation tools or interfaces to such tools.

Here are some of the new open-source toolkits and environments I’m tracking:

OpenAI Gym

- OpenAI Gym is a popular toolkit for developing and comparing reinforcement learning models. Its simulator interface supports a variety of environments, including classic Atari games as well as robotics and physics simulators such as MuJoCo and the DARPA-funded Gazebo. Like other DRL toolkits, it offers APIs that feed observations and rewards back to agents.
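The observation/reward API mentioned above usually boils down to two calls: `reset()` to start an episode and `step(action)` to advance it. In classic Gym releases, `step` returns an observation, a reward, a `done` flag, and an `info` dict (later releases split `done` into `terminated` and `truncated`). The sketch below mimics that classic shape with a made-up `CoinGuessEnv` class so it runs without Gym installed; it illustrates the interaction loop and is not Gym’s own code.

```python
import random


class CoinGuessEnv:
    """Hypothetical toy environment using a Gym-like reset()/step() convention.

    Each step the agent guesses a coin flip (0 or 1) and earns reward 1.0 for a
    correct guess. The observation is the previous flip (or -1 at the start).
    """

    def __init__(self, episode_length=10, seed=0):
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.t = 0
        self.last_flip = -1

    def reset(self):
        self.t = 0
        self.last_flip = -1
        return self.last_flip  # initial observation

    def step(self, action):
        flip = self.rng.randrange(2)
        reward = 1.0 if action == flip else 0.0
        self.t += 1
        self.last_flip = flip
        done = self.t >= self.episode_length
        return self.last_flip, reward, done, {}  # observation, reward, done, info


def run_episode(env, policy):
    """Generic agent-environment loop: observe, act, receive reward, repeat."""
    obs = env.reset()
    total_reward, done = 0.0, False
    while not done:
        action = policy(obs)
        obs, reward, done, _info = env.step(action)
        total_reward += reward
    return total_reward


def always_guess_one(obs):
    """Trivial fixed policy that ignores the observation."""
    return 1


env = CoinGuessEnv(episode_length=10, seed=42)
print("episode reward:", run_episode(env, always_guess_one))
```

Because `run_episode` only relies on `reset` and `step`, any environment exposing that pair, whether a coin toss or a physics simulator, can be plugged into the same agent loop; that shared interface is much of what these toolkits provide.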

DeepMind Lab

- DeepMind Lab is a 3D learning environment based on the Quake III first-person shooter video game, offering navigation and puzzle-solving tasks for learning agents. DeepMind recently added DMLab-30, a collection of new levels, and introduced IMPALA, its new distributed agent training architecture.

Psychlab

- Another DeepMind toolkit, open-sourced earlier this year, Psychlab extends DeepMind Lab to support cognitive psychology experiments, such as searching an array of items for a specific target or detecting changes within an array of items. Human and AI agent performance on these tasks can then be compared directly.

House3D

- A collaboration between Berkeley and Facebook AI researchers, House3D offers over 45,000 simulated indoor scenes with realistic room and furniture layouts. The primary task covered in the paper that introduced House3D was “concept-driven navigation,” for example, training an agent to navigate to a room in a house given only a high-level descriptor like “dining room.”

Unity Machine Learning Agents

- Under the stewardship of Danny Lange, its VP of AI and ML, game engine developer Unity has been working to incorporate cutting-edge AI technology into its platform. Unity Machine Learning Agents, released last fall, is an open-source Unity plugin that enables games and simulations running on the platform to serve as environments for training intelligent agents.

Ray

- While the other tools listed here focus on DRL training environments, Ray focuses on the infrastructure for running DRL at scale. Developed by Ion Stoica and his team at the Berkeley RISELab, Ray is a framework for efficiently running Python code on clusters and large multi-core machines, designed specifically to provide low-latency distributed execution for reinforcement learning workloads.
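A core pattern Ray targets is fanning out many short simulation tasks (rollouts) to parallel workers and gathering their results with low latency. The sketch below shows that fan-out/gather pattern using only Python’s standard `concurrent.futures` module, so it is a stand-in rather than Ray code, and the `rollout` function is hypothetical. With Ray, roughly the same structure would decorate `rollout` with `@ray.remote`, launch tasks with `rollout.remote(seed)`, and collect the results with `ray.get(...)`, spreading the work across processes or machines instead of threads.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def rollout(seed, steps=100):
    """Hypothetical rollout task: simulate a random-walk episode, return total reward.

    Stands in for the expensive simulation work a DRL system farms out to workers.
    """
    rng = random.Random(seed)  # per-task RNG so results don't depend on scheduling
    position, total_reward = 0, 0.0
    for _ in range(steps):
        position += rng.choice((-1, 1))
        total_reward += 1.0 if position > 0 else 0.0
    return total_reward


def collect_rollouts(seeds, max_workers=4):
    """Fan rollouts out to a pool of workers and gather the results in order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(rollout, seeds))


results = collect_rollouts(range(8))
print(results)
```

Threads keep the example self-contained, but in CPython they do not execute pure-Python simulation code in parallel; that limitation is part of why a system like Ray distributes tasks across separate worker processes and machines.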

The advent of all these tools and platforms will make DRL more accessible to developers and researchers. They’ll need all the help they can get, though, because deep reinforcement learning can be challenging to put into practice. Google engineer Alex Irpan explains why in a recent critique with the provocative title “Deep Reinforcement Learning Doesn’t Work Yet.” Irpan cites the large amount of data DRL requires, the fact that most DRL approaches don’t take advantage of prior knowledge about the systems and environments involved, and the aforementioned difficulty of designing an effective reward function, among other issues.

I expect deep reinforcement learning to remain a hot topic in AI, from both the research and applied perspectives, for some time. It has shown great promise at handling complex, multifaceted, sequential decision-making problems, which makes it useful not just for industrial systems and gaming but also in fields as varied as marketing, advertising, finance, education, and even data science itself.

Are you working on deep reinforcement learning? If so, I’d love to hear how you’re applying it.