AI is fundamentally shifting the way users interact with their devices and data. From LLMs powering intelligent assistants to generative audio, video, and image applications, these experiences are computationally intensive in ways that traditional computing workloads never were. As a result, much of what determines user productivity and satisfaction, as well as what’s possible for ISV applications and innovation, is gated behind compute performance.

The promise of local, on-device AI inference is compelling: lower latency, privacy by design, no recurring API costs, and functionality that works regardless of connectivity. But that promise only materializes if the silicon can actually deliver the performance needed to run these workloads, and if the applications that end-users count on evolve to take advantage of them.

With this in mind, it’s no surprise to see Qualcomm continuing to invest heavily in increasing the performance and capabilities of its platforms for AI workloads. The latest advances were on display at the recent Snapdragon Summit in Maui, which I had the opportunity to attend. What struck me most about the company’s AI story wasn’t just the year-over-year performance gains, but how quickly the ecosystem is growing to take advantage of them. The implications for practical deployment of on-device intelligence in both consumer and enterprise contexts are significant. (Disclosure: My travel expenses were covered by Qualcomm.)

Platform Updates: Mobile and PC

The company announced updates to its processor lineup for both mobile and laptop devices. The latest versions are now the Snapdragon 8 Elite Gen 5 for mobile and Snapdragon X2 Elite for PCs.

While these refreshed platforms feature improvements across all three main processing units—the Oryon CPU, Adreno GPU, and Hexagon NPU—from an AI inference perspective, the NPU numbers are what matter most.

This distinction can be a bit confusing for those familiar with data center AI, where GPUs dominate inference workloads. Processors specialized for AI workloads like Google’s TPU or custom accelerators from companies like Graphcore and Cerebras haven’t gained much traction in that market relative to NVIDIA’s GPU offerings. On Snapdragon mobile and PC devices, however, specialized NPUs offer faster, more power-efficient AI inference, while GPUs primarily serve in the more traditional role of supporting games and other graphics-intensive applications.

For mobile devices, Snapdragon 8 Elite Gen 5 features a re-architected Hexagon NPU which delivers up to 37% faster performance with up to 16% better power efficiency compared to its predecessor.

For Snapdragon X2 Elite PCs, the Hexagon NPU features significantly increased performance, topping out at about 80 tensor operations per second (TOPS)—nearly double the performance of last year’s platform—and supporting LLM operations at up to 220 tokens per second.

"Speed is the moat. The question is how are we anchoring that in this new generation of chip."

— Sam Charrington (@samcharrington) September 25, 2025

Double digit growth in speed and performance per watt in a single generation helps!

Vinesh Sukumar, VP Product Mgmt, AI/GenAI @Qualcomm #snapdragonsummit2025 @Snapdragon… pic.twitter.com/G45JwSkLbK

The Ecosystem Story

NPU performance gains only matter if applications actually take advantage of them. The partner presentations at the Summit demonstrated that developers are increasingly adapting their applications to utilize the Snapdragon NPU.

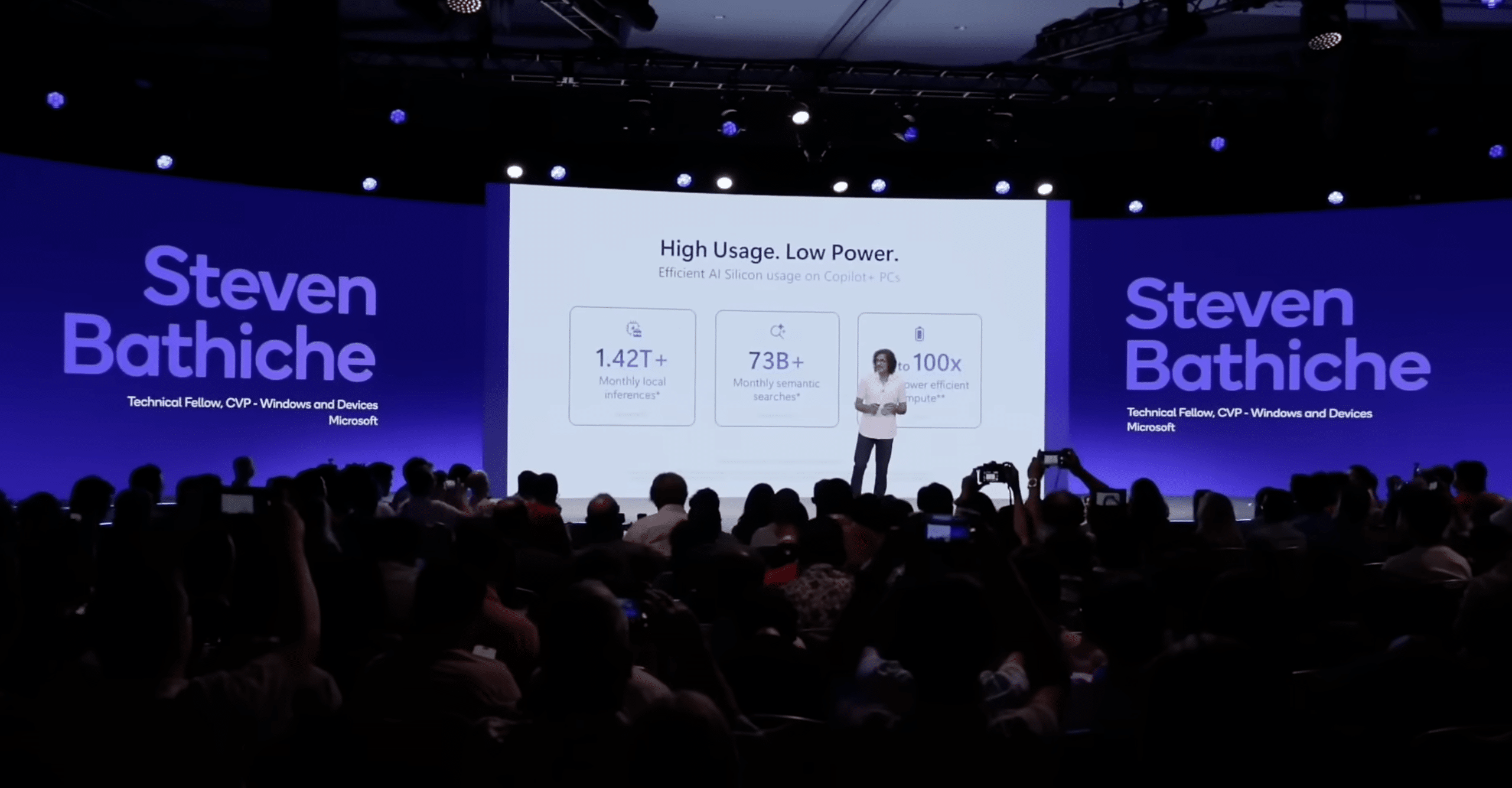

Major partners including Samsung and Microsoft took to the stage to discuss AI and NPU integration in their products. Stevie Bathiche, Technical Fellow and CVP at Microsoft, shared particularly compelling stats while presenting on the evolution of edge computing and the future of the PC:

- Since the launch of Copilot+ PCs last year, the platform is now powering up to 1.4 trillion AI inference operations per month. That’s a significant volume of inferences not hitting data centers.

- He also shared a stat demonstrating 100x greater power efficiency for Snapdragon inference based on their testing. The numbers are based on April 2024 testing that pitted pre-release Copilot+ PC builds with Snapdragon 10- and 12-core configurations (QNN build) against Windows 11 PCs with NVIDIA 4080 GPUs (CUDA build). This dramatic efficiency advantage helps explain the rapid growth in on-device inference adoption.

In a later session, we heard from Divya Venkataramu, Windows Developer Platform PMM at Microsoft, who demonstrated some of the concrete ways that NPU-accelerated AI is improving the Windows experience on Copilot+ PCs.

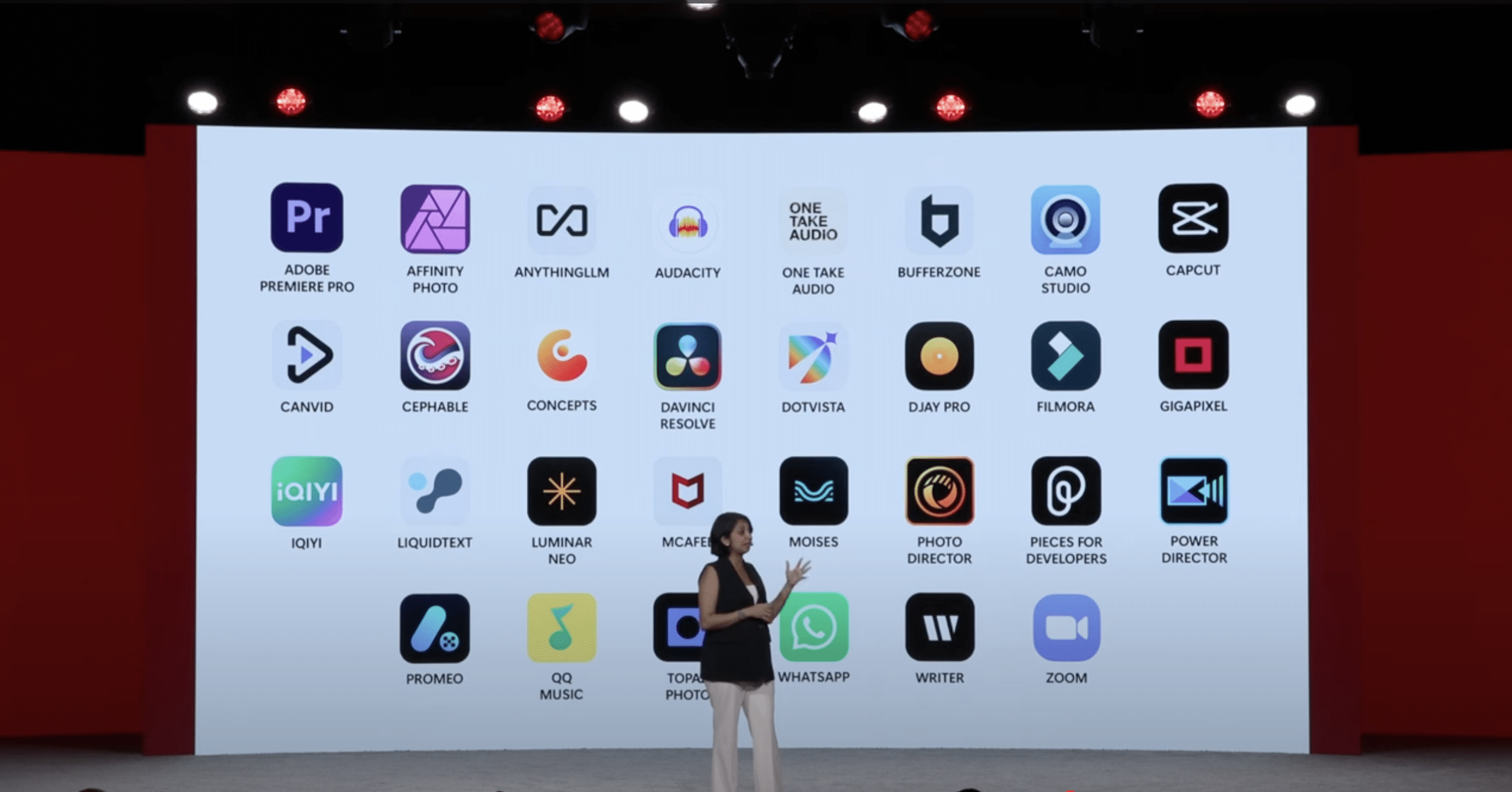

Beyond Microsoft’s own use of the NPU, however, Divya’s presentation profiled a number of ISVs whose products benefit from local inference running on NPUs.

These included quite a few popular ISV applications for Windows, such as Adobe Premiere, Affinity Photo, CapCut, Camo Studio, DaVinci Resolve, Wondershare Filmora, WhatsApp, and Zoom, which all now leverage the NPU for various AI features.

Wondershare implemented a variety of AI effects for its Filmora video editing application. By taking advantage of the Hexagon NPU, they achieve 25% faster rendering performance than the CPU. And Topaz Labs optimized over 30 custom AI models for Hexagon in Topaz Studio, their pro image and video editing application, enabling features like upscaling, image and video enhancement, photo restoration, and more. They found that their models run 30% faster on NPU vs CPU+GPU on the same device.

The breadth of ISV adoption was notable, spanning consumer and professional creativity apps, productivity, and collaboration platforms. It’s a strong signal that the ecosystem is maturing around NPU acceleration.

What This Means for Enterprises, OEMs, and ISVs

The Summit’s announcements and demonstrations surface several important implications for those building or buying AI-enabled systems:

- Local models are now a viable inference option. With 80 TOPS NPUs on PC and substantially faster NPUs on mobile, we’re getting closer to being able to build AI agents that feel collaborative rather than clunky, and that enable real-time AI effects in creative and communications applications. Even hybrid architectures combining cloud and edge inference could be improved by devices that can handle “last-mile” perception, personalization, and action loops without round-trips.

- Developer tooling is maturing. The general availability of Windows ML (announced the same week as the Summit) alongside Qualcomm’s maturing support for existing tools like the ONNX Runtime, Pytorch ExecuTorch, TensorFlow Lite and Qualcomm AI Engine Direct, means targeting Snapdragon NPUs, especially those running Windows, is easier than than a year ago. The “write once, accelerate where available” story still isn’t perfect, but it’s trending in the right direction—and critically, the addressable installed base of NPU-enabled devices is growing rapidly.

- Power efficiency matters as much as peak performance. Sustained, on-battery performance is increasingly the bar that separates “cool demo” from “shippable feature.” Qualcomm’s efficiency gains—both mobile and PC—directly translate into more headroom for continuous, always-on AI capabilities without destroying battery life or requiring active cooling that degrades the user experience.

Challenges Ahead

While edge inference offers clear advantages in a number of targeted use cases, a number of which were demonstrated at the Summit, a number of significant challenges remain for Qualcomm to realize its vision of being a key:

- The SaaS headwind. The broader industry trend towards cloud-based SaaS applications works against the on-device AI narrative. As more applications move to the browser and rely on cloud backends, the opportunity to leverage local AI inference diminishes. Qualcomm needs developers to buck this trend and build experiences that leverage edge capabilities.

- The missing killer app. Edge AI still lacks its “killer app,” offering experiences or benefits so compelling that it drives device purchasing decisions. Photo enhancement and other media tools are useful, but they’re not yet moving the needle the way messaging or mobile photography did in previous platform transitions.

- Executing across fragmented markets. Mobile, PC, automotive, and industrial/IoT each require different AI capabilities and go-to-market strategies. Serving all these markets simultaneously stretches engineering resources and complicates the platform story for developers.

From “Intel Inside” to “AI Everywhere”

In the 1990s, “Intel Inside” taught consumers to care about the chip. Qualcomm is attempting a version of that play, complete with prominent Mercedes-AMG Formula One and Manchester United team sponsorships, for the AI era. It’s a difficult challenge: building brand awareness is hard enough; translating that into recognized value requires making the promise tangible through faster AI effects, smarter assistants, and better performance for AI apps yet to come, while keeping devices cool and quiet and delivering all-day battery life.

This year’s Summit demonstrated progress on both the silicon and software fronts. The performance improvements were substantial, and the breadth of ISV adoption signals genuine ecosystem momentum. The 1.4 trillion monthly inferences Microsoft cited aren’t just a vanity metric; they represent AI workloads that have been pulled from the cloud to the edge, enabled by the kind of efficiency gains that Snapdragon aims to deliver.

.@Qualcomm CEO @cristianoamon takes the @Snapdragon Summit stage to lay out his AI Everywhere vision for the future. Excited to be here!#SnapdragonSummit #SnapdragonSummit2025#PaidTravel_Snapdragon pic.twitter.com/wtLIO821Bl

— Sam Charrington (@samcharrington) September 24, 2025

AI Everywhere technical challenges that need new solutions. Will encompass models, OS, silicon & more.@Snapdragon @cristianoamon @Qualcomm

— Sam Charrington (@samcharrington) September 24, 2025

#SnapdragonSummit2025 #PaidTravel_Snapdragon pic.twitter.com/OnVDg2iLQP

Love @cristianoamon and @rosterloh reminiscing about the first Android phone, the G1 / HTC Dream. (I had one and loved it.) @Snapdragon #SnapdragonSummit2025 #PaidTravel_Snapdragon pic.twitter.com/EFxHGZTweT

— Sam Charrington (@samcharrington) September 24, 2025

Edge isn't just a source of compute, but also a node for accessing and processing the user's most important data.@Snapdragon @cristianoamon @Qualcomm#SnapdragonSummit2025#PaidTravel_Snapdragon pic.twitter.com/H1IF6OI2YT

— Sam Charrington (@samcharrington) September 24, 2025

Divya Venktaramu showing some of the ways @Microsoft and partners are taking advantage of the @Snapdragon NPU to create new experiences capabilities for users@filmora_editor - video editing 25% faster on NPU@topazlabs - photo editing w 34 models on NPU@OneTakeAudio -… pic.twitter.com/9inV9Z9wUN

— Sam Charrington (@samcharrington) September 25, 2025

.@AnythingLLM demos new mobile app and Distributed Inference feature at #snapdragonsummit2025. User runs AnythingLLM client on their various @snapdragon devices and inference is automatically done on most appropriate connected device.#paidtravel_snapdragon pic.twitter.com/7QSFB4YByS

— Sam Charrington (@samcharrington) September 25, 2025

Token rate is critical to customer experience. Latest gen platform delivers 220 tps for 3B parameter model.

— Sam Charrington (@samcharrington) September 25, 2025

Vinesh Sukumar, VP Product Mgmt, AI/GenAI @Qualcomm #snapdragonsummit2025 @Snapdragon #paidtravel_snapdragon pic.twitter.com/y43gz0JHlx

Samsung using @Snapdragon NPU to enable broad array of Gen AI capabilities on-device including image, audio and camera apps.#SnapdragonSummit2025 @Qualcomm #paidtravel_snapdragon pic.twitter.com/ORYjd1pXWu

— Sam Charrington (@samcharrington) September 24, 2025

A timeline of on-device AI capabilities. Innovation is accelerating, enabling new user experiences across new device form factors.@sbathiche Tech Fellow & CVP @Microsoft presenting at #snapdragonsummit2025.

— Sam Charrington (@samcharrington) September 24, 2025

@snapdragon @Qualcomm #paidtravel_snapdragon pic.twitter.com/qXsCb2RyuV