Hi there,

It was worth the wait, this newsletter number eight!

Oh so Adversarial, Part I

“Adversarial” is a hot term in deep learning right now. The word comes up in two main contexts: I’ll call them adversarial training and adversarial attacks here to be clear. You’ll also hear ambiguous terms like “adversarial machine learning” used, but no worries… after the brief explainer in this and a future newsletter, you’ll easily be able to distinguish the two based on context.

Let’s start with adversarial training, which is a pretty nifty idea. Basically, we pit two neural networks against each other so that one helps train the other. The idea was popularized by a good fellow named Ian in what he called Generative Adversarial Networks (GANs), in 2014. (This week’s podcast guest, Jürgen Schmidhuber, explored a similar idea in a 1992 paper, while at CU Boulder.)

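GANs apply the adversarial training idea to the creation of new stuff. In a GAN you have two neural nets: a generator that tries to create real-looking stuff and a discriminator that tries to tell the real stuff from the generator’s fakes. The discriminator is trained as an ordinary classifier on a mix of real examples and generated ones (the only labels it needs are “real” and “fake”). Its judgments are then used as the training signal for the generator, and the two take turns improving. Because those labels come for free, the whole setup can learn from unlabeled data (i.e. unsupervised learning).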
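To make the generator-versus-discriminator tug-of-war a bit more concrete, here is a minimal sketch in plain Python. It is a toy, not how real GANs are built: the “networks” are one-parameter-pair functions, the “real data” is just numbers near 4, and every name in it (`train_toy_gan`, `a`, `b`, `w`, `c`) is something I made up for illustration.

```python
import math
import random


def sigmoid(t):
    # Logistic function, written to avoid overflow for large |t|.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: g(z) = a*z + b
    w, c = 0.1, 0.0  # discriminator: D(x) = sigmoid(w*x + c)

    for _ in range(steps):
        x_real = rng.gauss(4.0, 0.5)  # a "real" sample (assumed: numbers near 4)
        z = rng.gauss(0.0, 1.0)       # random noise fed to the generator
        x_fake = a * z + b            # the generator's attempt at a sample

        # Discriminator step: push D(real) toward 1 and D(fake) toward 0.
        # Loss: -log(D(real)) - log(1 - D(fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        grad_w = (d_real - 1.0) * x_real + d_fake * x_fake
        grad_c = (d_real - 1.0) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: push D(fake) toward 1, i.e. fool the discriminator.
        # Loss: -log(D(fake)); the gradient flows back through D into g.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (d_fake - 1.0) * w
        a -= lr * grad_x * z
        b -= lr * grad_x

    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print("generator params:", a, b)
    print("discriminator params:", w, c)
```

Notice that the generator never sees a real sample directly. The only thing it learns from is the discriminator’s opinion of its fakes. Toy setups like this one can oscillate rather than settle, so don’t read too much into the final numbers.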

Adversarial training was the topic of the first TWIML Online Meetup, which was held a couple of weeks ago and for which the video archive is now available.

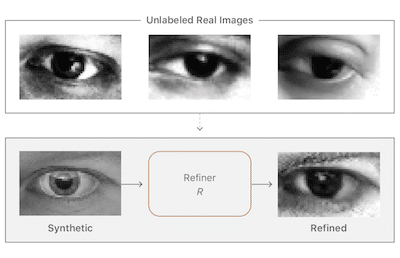

The meetup focused on Apple’s CVPR best-paper-award winner, “Learning from Simulated and Unsupervised Images through Adversarial Training.” Consider a problem like eye gaze detection in the context of the GANs I just described. You’ve got a picture from, for example, a cell phone camera, and you want to determine which way the user is looking. Collecting enough labeled eye gaze training data to train a robust detector is hard and expensive. Generating simulated eye gaze training data is much easier and cheaper, though. (For example, we can use something like a video game engine.)

The problem is that the simulated eye gaze images don’t look close enough to real images to train a model to work effectively on real data. This paper proposes using a Generative Adversarial Network to train a “refiner” network (i.e. a generator) that can make simulated eye gaze images look like real eye gaze images while preserving the gaze direction, with pretty good results. Cool stuff!
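One way to think about “look real, but keep the gaze direction” is as a loss with two parts: a realism term that is small when the discriminator is fooled, plus a penalty on how far the refined image drifts from the simulated one it started from. Here is a rough sketch of that idea in plain Python. It is not the paper’s code; `refiner_loss`, `lam`, and the flat-list image format are all assumptions I made for illustration.

```python
import math


def refiner_loss(simulated, refined, d_prob_real, lam=0.5):
    """Toy per-image loss for a refiner network (illustrative names only).

    simulated, refined: flat lists of pixel values for the same image
    d_prob_real: the discriminator's probability that `refined` is real
    lam: hypothetical weight on staying close to the simulated input
    """
    # Realism term: small when the discriminator thinks the image is real.
    realism = -math.log(max(d_prob_real, 1e-12))

    # Drift term: mean absolute pixel change. Keeping this small ties the
    # refined image (and so its gaze label) to the simulated original.
    drift = sum(abs(r - s) for r, s in zip(refined, simulated)) / len(simulated)

    return realism + lam * drift
```

Turning `lam` up keeps the refiner on a shorter leash (more faithful to the simulated image, less freedom to add realism), and turning it down does the opposite.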

Thanks again to meetup members Josh Manela, who did a great job presenting this paper, and Kevin Mader, for walking through a TensorFlow implementation of the model that he created! You guys are just awesome!

Our next meetup will be held on Tuesday, September 12th at 3 pm Pacific Time. Our presenter will be Nikola Kučerová, who will be leading us in a discussion of one of the classic papers on recurrent neural nets, “Learning Long-Term Dependencies with Gradient Descent is Difficult.” Visit twimlai.com/meetup for more details, to register, or to access the archives.