I want to talk about a paper published this week by some folks in Geoffrey Hinton’s group at the University of Toronto. You’ll recall I mentioned Hinton last time when discussing some of the seminal papers in deep learning for image recognition. The paper, by Jimmy Lei Ba, Jamie Ryan Kiros and Hinton, introduces a new technique for training deep networks called Layer Normalization.

One of the big challenges for folks doing deep learning is the amount of time and compute power required to train their models. There are lots of ways we're trying to deal with this issue. We can build faster hardware, like the TITAN X GPU discussed earlier or Google's custom Tensor Processing Unit (TPU) chip, which we covered on the May 20th show. We can distribute training across more computers, as Jeff Dean and his team at Google Research have done. Or we can tweak the algorithms themselves so that they train faster.

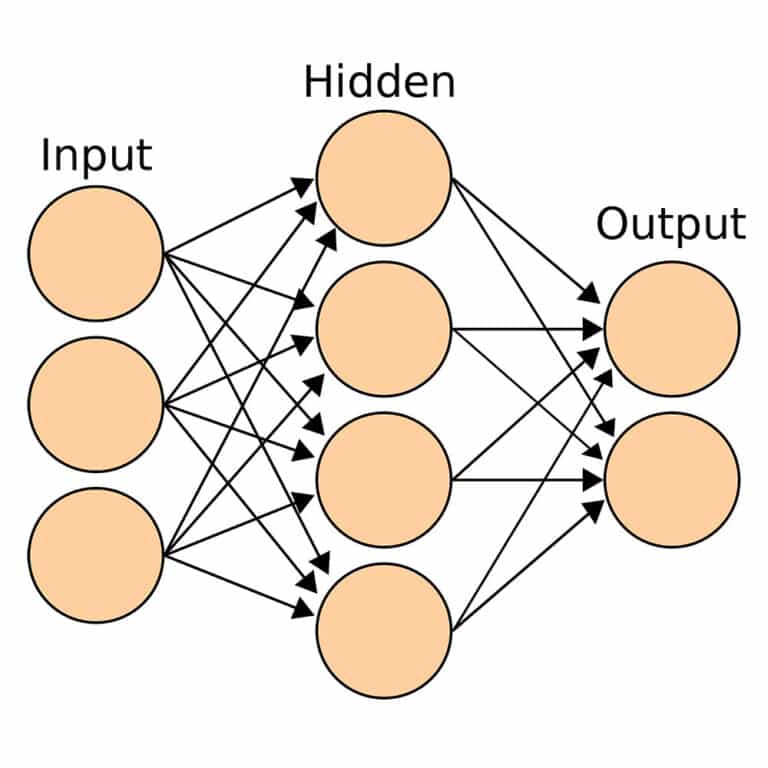

Early last year Sergey Ioffe and Christian Szegedy, also from Google, published a technique they developed for doing exactly this, called Batch Normalization. The idea behind Batch Normalization is this: in a neural network, the inputs to each layer are affected by the parameters of all the preceding layers, so as the network gets deeper, small changes to those parameters get amplified by the later layers. That's a problem, because it means the inputs to the various layers shift around a lot during training, and the network spends a lot of its effort adapting to these shifts instead of learning what we actually care about: the relationship between the network's inputs and the training labels. What Ioffe and Szegedy came up with was a way to normalize each layer's inputs while the network is being trained, so that their statistical distribution stays more constant. This in turn accelerates training. They did this by normalizing the summed inputs to each hidden neuron using the mean and variance computed over each mini-batch.
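To make the batch-by-batch idea concrete, here's a minimal sketch in plain Python of the training-time normalization step. This is my own illustration, not code from the paper: the function name, the list-of-lists layout (one row per example, one column per hidden unit) and the small `eps` constant for numerical stability are assumptions, and a real implementation would also keep running averages of the mean and variance to use at inference time.

```python
import math

def batch_norm(batch, gamma, beta, eps=1e-5):
    """Training-time batch normalization (illustrative sketch).

    batch: list of examples, each a list of summed inputs (one per hidden unit).
    gamma, beta: per-unit gain and shift (learned parameters in a real network).
    eps: assumed small constant to avoid dividing by zero.
    """
    n = len(batch)
    num_units = len(batch[0])
    out = [[0.0] * num_units for _ in range(n)]
    for i in range(num_units):
        # Statistics for hidden unit i are taken ACROSS the examples in the batch.
        column = [example[i] for example in batch]
        mean = sum(column) / n
        var = sum((a - mean) ** 2 for a in column) / n
        for j, a in enumerate(column):
            out[j][i] = gamma[i] * (a - mean) / math.sqrt(var + eps) + beta[i]
    return out

# Two examples, two hidden units.
print(batch_norm([[1.0, 2.0], [3.0, 6.0]], gamma=[1.0, 1.0], beta=[0.0, 0.0]))
```

Note what happens with a batch of a single example: each unit's mean equals its only value, so every normalized output collapses to zero (just `beta` after the shift). That hints at why the technique struggles when batches get very small.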

The Batch Normalization approach worked very well and has become the state of the art for training Convolutional Neural Nets, but it doesn't carry over well to Recurrent Networks, where the summed inputs change at every time step, or to cases where the batch size needs to be small, such as an online learning scenario where you're training in batches of 1.

With Layer Normalization, instead of normalizing the inputs to each hidden neuron across a batch, we compute the mean and variance over all the summed inputs to the neurons in a layer, separately for each training example and at each time step. Like Batch Normalization, this stabilizes the dynamics of the hidden layers and accelerates training, but without tying the method to a batched implementation.
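Here's a rough sketch, again in plain Python, of what normalizing across a layer looks like, plus one step of a simple tanh RNN that uses it. It's an illustration under my own assumptions, not the authors' Theano code: `layer_norm`, `matvec` and `ln_rnn_step` are hypothetical helper names, `eps` is an assumed stabilizer, and the per-unit gain and bias are applied after normalization. The key point is that the mean and variance come from one example's hidden units at one time step, so nothing depends on the batch.

```python
import math

def layer_norm(summed_inputs, gain, bias, eps=1e-5):
    """Normalize ONE example's summed inputs across all H hidden units of a layer."""
    H = len(summed_inputs)
    mean = sum(summed_inputs) / H
    var = sum((a - mean) ** 2 for a in summed_inputs) / H
    std = math.sqrt(var + eps)  # eps is an assumed stabilizer
    # Per-unit gain and bias are applied after normalization.
    return [g * (a - mean) / std + b for a, g, b in zip(summed_inputs, gain, bias)]

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def ln_rnn_step(x_t, h_prev, W_xh, W_hh, gain, bias):
    """One step of a simple tanh RNN with layer normalization (hypothetical helper)."""
    # Summed inputs at this time step: input contribution plus recurrent contribution.
    a_t = [u + r for u, r in zip(matvec(W_xh, x_t), matvec(W_hh, h_prev))]
    # Statistics come from this layer at this time step only, so no batch is needed.
    return [math.tanh(v) for v in layer_norm(a_t, gain, bias)]

# Works fine with a "batch" of one example.
h = ln_rnn_step([1.0], [0.0, 0.0], [[1.0], [2.0]], [[0.0, 0.0], [0.0, 0.0]],
                gain=[1.0, 1.0], bias=[0.0, 0.0])
print(h)  # roughly [-0.76, 0.76]
```

Because the same normalization runs at every time step using only that step's activations, it drops straight into a recurrent loop, which is exactly where the batch-based approach was awkward.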

The team put Layer Normalization to the test on 6 different RNN tasks and found that LN helps training converge faster in the RNN settings where BN doesn't work well, but that BN still performs best for training ConvNets.

A Theano-based implementation of the algorithm was published with the paper, and code for a Keras-based implementation has since been posted to GitHub by Ehud Ben-Reuven.

It's pretty cool how quickly the latest research papers are being implemented on the various deep learning frameworks. One GitHub repo I came across this week that's interesting in that regard is the LeavesBreathe TensorFlow with Latest Papers repo. It holds implementations of recent RNN and NLP papers, including:

– Highway Networks paper

– The Recurrent Highway Networks paper, the preprint of which was just posted to arXiv last week

– Multiplicative Integration within RNNs

– Recurrent Dropout Without Memory Loss

– And the GRU Mutants paper