Who is Edward Raff?

Edward Raff works as a head scientist at the consulting firm Booz Allen Hamilton (BAH). As Edward describes it, their business model is “renting out people’s brains” to business and government organizations. Edward sees BAH research as both a way to establish expertise in their field and a way to train staff in how to solve interesting problems.

Introduction to Malware and Machine Learning

The interesting thing about using machine learning to detect malware is that it’s a fundamentally different problem from other forms of ML. Edward describes other ML applications, like computer vision or language processing, as working on the principle that “things near each other are related”: neighboring pixels in an image, or neighboring words in a sentence, tend to be related, and models are built to exploit that locality.

Malware is a totally different ball game. A malware detector isn’t learning to distinguish pixels or words; it’s learning to recognize code, which can come in many different forms. In addition, the data used to train malware detectors is far larger than in other ML applications: a single training example can be an entire 30 MB application. Because each sample is so distinctive and the data is so large and varied, the cybersecurity field is still working out best practices for data collection and datasets.

“The malware author, they don’t need to abide by the rules. That’s part of the whole point, is they’re trying to break the rules. If there’s a spec that says, ‘Oh, you don’t set this flag in the executable because it will behave poorly.’ Well, if that helps the malware author, they’re going to do it.”
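The following minimal Python sketch makes the scale concrete. It assumes a byte-level input representation, in which every raw byte of an executable becomes one integer token. This is a common way to feed whole binaries to a model, but the source does not say which representation Edward uses. `bytes_to_tokens`, `PAD_TOKEN`, and the 224x224 RGB image used for comparison are illustrative assumptions. Because this view never parses the file format, a spec-violating flag is simply more bytes.

```python
# Sketch (assumption): treat a raw executable as a sequence of byte tokens.
PAD_TOKEN = 256  # hypothetical padding id, one past the 256 possible byte values


def bytes_to_tokens(data: bytes, max_len: int) -> list:
    """Map each byte to an integer token (0-255), then truncate or pad to max_len."""
    tokens = list(data[:max_len])  # iterating over bytes yields ints 0-255
    tokens.extend([PAD_TOKEN] * (max_len - len(tokens)))
    return tokens


# Scale comparison: one 30 MB executable vs. one typical 224x224 RGB image input.
exe_values = 30 * 1024 * 1024      # 31,457,280 byte tokens
image_values = 224 * 224 * 3       # 150,528 pixel values
ratio = exe_values / image_values  # about 209x more input values per example

if __name__ == "__main__":
    sample = b"MZ\x90\x00"  # typical first bytes of a Windows PE file ("MZ" magic)
    print(bytes_to_tokens(sample, 6))  # [77, 90, 144, 0, 256, 256]
    print(f"{exe_values:,} vs {image_values:,} values, ~{ratio:.0f}x")
```

Under these assumptions, a single 30 MB sample carries roughly 209 times as many input values as a typical image-classifier input, before any truncation.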

Fighting Malware with Adversarial Machine Learning

Recent work in the malware detection field has focused on using machine learning models to detect malicious code. ML has the advantage of being more robust than the static rulesets traditionally used to detect malware. However, it is still subject to the cat-and-mouse game that plagues the field: bad actors aren’t static, and they continually modify their code to evade detection.

In addition, machine learning approaches open up a new vector of evasion: adversarial attacks against the models themselves. In these attacks, noise or other patterns are crafted and injected into the input data with the goal of causing the model to misclassify it.
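The idea of crafting input noise can be illustrated with the fast gradient sign method (FGSM), one standard attack, applied here to a toy logistic regression model. This is a minimal sketch. The weights, the three numeric features, and `eps` are hypothetical values chosen for the example, not anything from Edward’s work.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def predict(w, b, x) -> float:
    """Probability that x belongs to class 1 (here, 'malicious')."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """Shift each feature by eps in the direction that increases the loss.

    For logistic regression with binary cross-entropy, the gradient of the loss
    with respect to the input is (p - y) * w, so FGSM only needs its sign.
    """
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


# Hypothetical model and sample (label 1 = malicious).
w, b = [2.0, -1.0, 0.5], -0.5
x, y = [1.0, 0.5, 1.0], 1

x_adv = fgsm(w, b, x, y, eps=0.6)
print(round(predict(w, b, x), 3), round(predict(w, b, x_adv), 3))  # 0.818 0.354
```

The perturbed sample now scores below 0.5, so the model calls it benign. Real malware features are often discrete, and any change must keep the program working. Attacks on executables are therefore far more constrained than the continuous noise used here.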

Generally, the best defense against adversarial attacks is a technique called adversarial training, in which the model is trained on adversarially perturbed examples as well as the original data. Since 2017, it has been established in the literature as the most effective way to make models resistant to adversarial attacks.
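Here is a minimal sketch of the adversarial-training loop, assuming a toy 2D logistic regression and synthetic Gaussian data. Each training step first crafts an FGSM example against the current model, then updates on both the clean and perturbed versions. Single-step FGSM stands in here for the multi-step (PGD) attacks usually used in adversarial training, because it is simpler. All function names, the data generator, and the hyperparameters are illustrative assumptions.

```python
import math
import random


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def fgsm(w, b, x, y, eps):
    """Perturb x by eps along the sign of the input gradient (p - y) * w."""
    p = sigmoid(dot(w, x) + b)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def adversarial_train(data, eps=0.5, lr=0.1, epochs=50):
    """SGD on logistic regression using each clean point plus its FGSM version."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            # Inner step: attack the current model to get a perturbed input.
            x_adv = fgsm(w, b, x, y, eps)
            # Outer step: fit both the clean and the adversarial example.
            for xi, yi in ((x, y), (x_adv, y)):
                err = sigmoid(dot(w, xi) + b) - yi
                w = [wj - lr * err * xj for wj, xj in zip(w, xi)]
                b -= lr * err
    return w, b


def make_blobs(n, seed=0):
    """Synthetic data: label 1 around (2, 2), label 0 around (-2, -2)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        y = rng.randint(0, 1)
        c = 2.0 if y == 1 else -2.0
        data.append(([rng.gauss(c, 1.0), rng.gauss(c, 1.0)], y))
    return data


def accuracy(w, b, data, eps=0.0):
    """Accuracy on clean inputs (eps=0) or on FGSM-perturbed inputs (eps>0)."""
    hits = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        hits += int((sigmoid(dot(w, x_eval) + b) >= 0.5) == y)
    return hits / len(data)


if __name__ == "__main__":
    train, test = make_blobs(200, seed=0), make_blobs(200, seed=1)
    w, b = adversarial_train(train)
    print(f"clean acc: {accuracy(w, b, test):.2f}, FGSM acc: {accuracy(w, b, test, eps=0.5):.2f}")
```

The inner attack is re-run against the model being trained. Each attack step therefore adds roughly one extra gradient computation per example, which is one reason adversarial training becomes expensive at scale.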

A common approach to evaluating adversarial attacks in cybersecurity research papers is to assume that the attacker has access to the same training data and classes as the model they’re attacking. Edward takes issue with this assumption because in practice there’s no reason malware authors would have access to the same training data and labels as the “victim” model.
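The distinction Edward draws can be made concrete with a toy transfer attack, sketched below under loud assumptions. The attacker trains a surrogate logistic regression on their own data, which shares no samples with the victim’s training set. They then craft FGSM inputs using only the surrogate’s gradients and measure how often those inputs fool the victim. The models, the data, `eps`, and the `transfer_success_rate` metric are illustrative and are not taken from the paper.

```python
import math
import random


def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def train_logreg(data, lr=0.1, epochs=50):
    """Plain SGD logistic regression; returns (weights, bias)."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            err = sigmoid(dot(w, x) + b) - y
            w = [wj - lr * err * xj for wj, xj in zip(w, x)]
            b -= lr * err
    return w, b


def fgsm(model, x, y, eps):
    """FGSM step computed from `model`'s own input gradient (p - y) * w."""
    w, b = model
    g = sigmoid(dot(w, x) + b) - y
    return [xi + eps * ((g * wi > 0) - (g * wi < 0)) for xi, wi in zip(x, w)]


def predicts(model, x):
    w, b = model
    return int(sigmoid(dot(w, x) + b) >= 0.5)


def transfer_success_rate(victim, surrogate, test, eps):
    """Share of test points the victim gets right that flip after an FGSM
    perturbation crafted on the surrogate (the victim's weights are never used)."""
    attempted = fooled = 0
    for x, y in test:
        if predicts(victim, x) != y:
            continue  # already misclassified; not counted as an attack
        attempted += 1
        fooled += predicts(victim, fgsm(surrogate, x, y, eps)) != y
    return fooled / max(attempted, 1)


def make_blobs(n, seed):
    """Synthetic data: label 1 around (1, 1), label 0 around (-1, -1)."""
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        y = rng.randint(0, 1)
        c = 1.0 if y == 1 else -1.0
        out.append(([rng.gauss(c, 1.0), rng.gauss(c, 1.0)], y))
    return out


victim = train_logreg(make_blobs(300, seed=0))     # victim's private training set
surrogate = train_logreg(make_blobs(300, seed=1))  # attacker's separate data
test = make_blobs(200, seed=2)
print(transfer_success_rate(victim, surrogate, test, eps=3.0))
```

Here the two training sets share no samples but do share both classes. Varying how much data and how many classes the two sides share is the kind of experiment the paper describes.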

In Edward’s most recent paper, “Adversarial Transfer Attacks With Unknown Data and Class

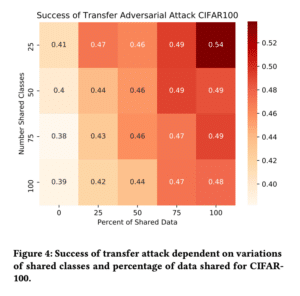

Overlap,” he and his team decided to see if there was a difference in attack outcomes with varying degrees of data and class overlap. Overall, the team found that less class overlap and less data overlap decreased the attack success rate. However, this wasn’t a perfectly linear distribution — the results varied as the percentage of data changed, so this finding can’t necessarily be generalized.

Figure 4 from “Adversarial Transfer Attacks With Unknown Data and Class Overlap”
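Sweeping data overlap requires building attacker training sets that share a controlled fraction of samples with the victim’s. The sketch below shows one hypothetical way to build such sets and to measure both kinds of overlap. `make_attacker_split`, `data_overlap`, `class_overlap`, and their exact definitions are assumptions for illustration; the paper may define overlap differently.

```python
import random


def make_attacker_split(victim_ids, outside_ids, size, overlap, seed=0):
    """Draw `size` sample IDs, an `overlap` fraction of them from the victim's
    training set and the rest from data the victim never saw."""
    rng = random.Random(seed)
    n_shared = round(size * overlap)
    return rng.sample(victim_ids, n_shared) + rng.sample(outside_ids, size - n_shared)


def data_overlap(attacker_ids, victim_ids):
    """Fraction of the attacker's samples that also appear in the victim's set."""
    victim = set(victim_ids)
    return sum(i in victim for i in attacker_ids) / len(attacker_ids)


def class_overlap(attacker_classes, victim_classes):
    """Fraction of the victim's classes that the attacker's data also covers."""
    victim = set(victim_classes)
    return len(victim & set(attacker_classes)) / len(victim)


victim_ids = list(range(1000))         # hypothetical sample IDs
outside_ids = list(range(1000, 2000))  # samples the victim never trained on
for overlap in (0.0, 0.25, 0.5, 1.0):
    split = make_attacker_split(victim_ids, outside_ids, size=400, overlap=overlap)
    print(overlap, data_overlap(split, victim_ids))

print(class_overlap({"trojan", "worm", "adware"}, {"ransomware", "trojan", "worm"}))
```

Each split would then be used to train a surrogate, and the attack success rate would be recorded per overlap level.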

Real-World Factors of Malware

One of the downsides of these experiments is that the models are very expensive to run. Edward is exploring ways to make the process more efficient, such as classifying a whole region of inputs at once instead of a single data point.
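As one illustration of predicting over a region rather than a point, consider randomized smoothing (Cohen et al., 2019). It classifies many Gaussian-noised copies of an input, takes a majority vote, and converts the vote share into a radius within which the prediction is meant to hold. The source does not say which approach Edward is exploring, so treat this minimal sketch as background only. `base_classifier` is a hypothetical stand-in model. The radius uses the raw vote share rather than the statistical lower bound a real certificate requires.

```python
import random
from collections import Counter
from statistics import NormalDist


def base_classifier(x):
    """Hypothetical stand-in model: label 1 when the features sum to a positive value."""
    return int(sum(x) > 0)


def smoothed_predict(classify, x, sigma=0.5, n=1000, seed=0):
    """Majority vote of `classify` over n Gaussian-perturbed copies of x.

    Returns (label, radius), where radius = sigma * Phi^-1(p_top) is the L2
    radius from Cohen et al. (2019) evaluated at the empirical vote share.
    """
    rng = random.Random(seed)
    votes = Counter(
        classify([xi + rng.gauss(0.0, sigma) for xi in x]) for _ in range(n)
    )
    label, count = votes.most_common(1)[0]
    p_top = count / n
    if p_top >= 1.0:
        return label, float("inf")  # a proper lower bound would stay below 1
    return label, sigma * NormalDist().inv_cdf(p_top)


print(smoothed_predict(base_classifier, [0.3, 0.0]))
```

For this linear stand-in, the true distance from `[0.3, 0.0]` to the decision boundary is 0.3/√2 ≈ 0.21, which the estimated radius should approximate. The trade-off is cost: each smoothed prediction needs `n` evaluations of the base model.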

When consulting for BAH clients, Edward’s job is to pin down the specifics of how a malware author could realistically attack, in order to improve his models’ performance. The first step is identifying the specific models that could be attacked, like a fraud detection model at a bank, and focusing only on protecting those models. This then allows Edward to build models that best fit the problem at hand.

“The way that I help our clients approach these kinds of problems is to really focus on that scope and narrow it down. Where do we actually need to do this? Let’s not panic and think that everything’s under attack all the time because that’s not realistic and you’re going to give yourself a heart attack.”

Graph Neural Networks and Adversarial Malware

Edward is especially excited about applying graph neural networks to cybersecurity problems. They’re not yet fast or scalable enough for the size of malware data. Even so, Edward sees potential in their ability to represent an executable’s features as the nodes and edges of a graph, capturing connections that could prove meaningful later.
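The following minimal sketch shows how a graph neural network could use that structure. It assumes the executable has already been turned into a control-flow graph whose nodes are basic blocks. One GraphSAGE-style message-passing layer (mean aggregator) updates each block from its neighbours, and mean pooling turns the node embeddings into a single vector for the whole program. The graph, the per-block features (`[instruction_count, call_count]`), and the fixed weights are hypothetical. A real model would learn the weights and stack several layers.

```python
# Toy control-flow graph: nodes are basic blocks, edges are possible jumps.
FEATURES = {0: [4.0, 0.0], 1: [2.0, 1.0], 2: [3.0, 0.0], 3: [1.0, 1.0]}
EDGES = [(0, 1), (0, 2), (1, 3), (2, 3)]  # an if/else "diamond"

W_SELF = [[1.0, 0.0], [0.0, 1.0]]   # illustrative weights, not learned
W_NEIGH = [[0.5, 0.0], [0.0, 0.5]]


def matvec(m, v):
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def neighbours(edges, n):
    """Treat control flow as undirected so information moves both ways."""
    adj = {i: [] for i in range(n)}
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    return adj


def message_pass(features, edges, w_self, w_neigh):
    """One layer: relu(W_self @ h_v + W_neigh @ mean(h_u for u in N(v)))."""
    adj = neighbours(edges, len(features))
    out = {}
    for v, h in features.items():
        nbrs = adj[v]
        mean = [sum(features[u][k] for u in nbrs) / len(nbrs) if nbrs else 0.0
                for k in range(len(h))]
        combined = [a + b for a, b in zip(matvec(w_self, h), matvec(w_neigh, mean))]
        out[v] = [max(0.0, c) for c in combined]  # ReLU
    return out


def readout(node_embeddings):
    """Mean-pool node embeddings into one vector for the whole executable."""
    vecs = list(node_embeddings.values())
    return [sum(col) / len(vecs) for col in zip(*vecs)]


emb = message_pass(FEATURES, EDGES, W_SELF, W_NEIGH)
print(readout(emb))  # [3.75, 0.75]
```

The pooled vector could then feed an ordinary classifier. Stacking more layers lets information travel further along the control flow.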